Prompt Engineering vs. Context Engineering: Crafting AI for Precision

By: Dominick Romano and Chris Gaskins

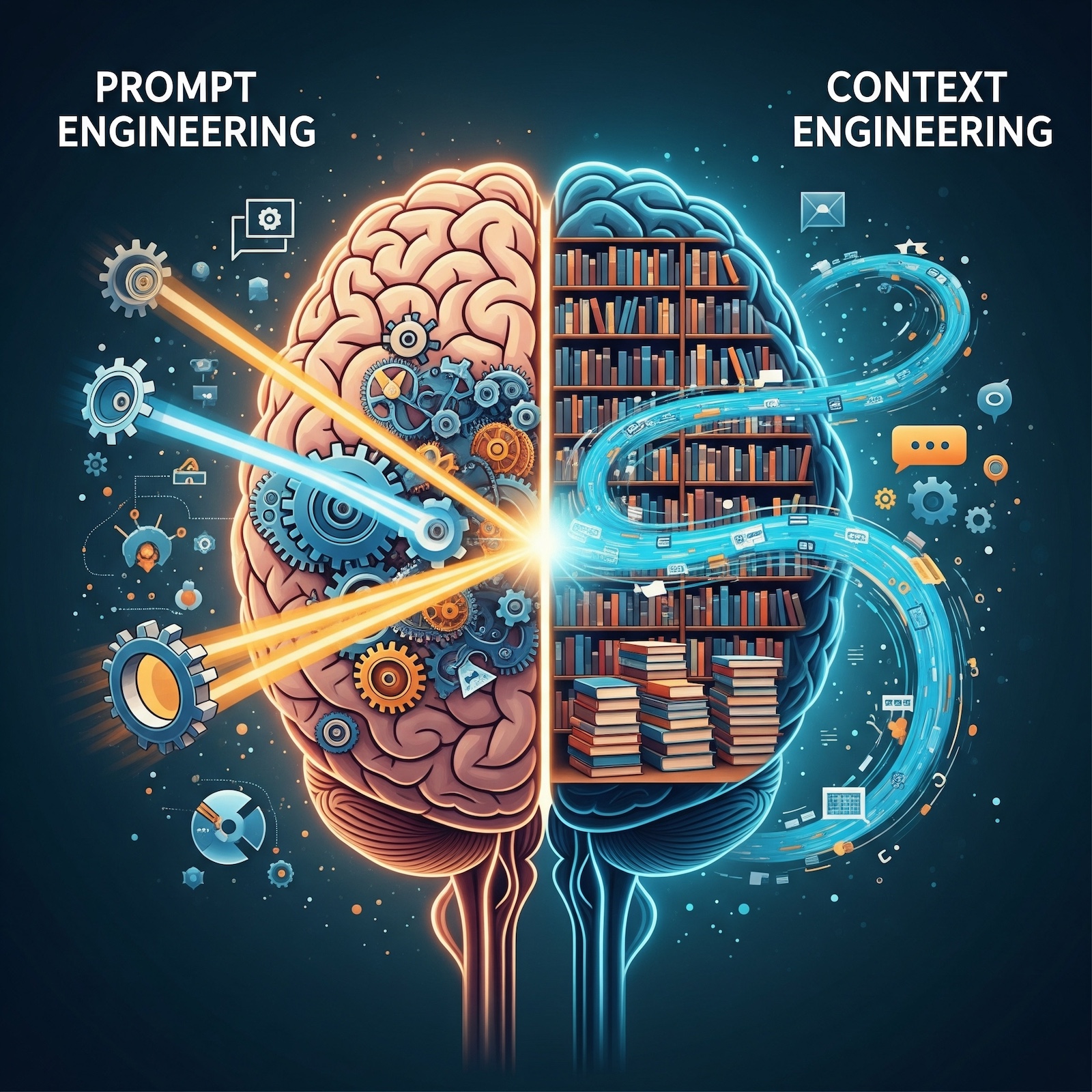

In the rapidly advancing world of artificial intelligence, two key techniques—Prompt Engineering and Context Engineering—are essential for optimizing interactions with language models. While distinct, these practices are deeply interdependent, shaping AI behavior through complementary approaches. This article explores their definitions, core methodologies, and dynamic relationship, highlighting how they work together to deliver precise, task-specific outcomes.

Understanding Prompt Engineering

Prompt Engineering is the discipline of designing precise, effective inputs (prompts) to guide an AI model toward a desired response. It is the art of asking: optimizing the structure, clarity, and intent of an instruction so the model returns better, more accurate responses. The 18 core techniques below, grouped by purpose, help both general technology users and engineers communicate effectively with AI, from simple questions to complex problem-solving.

Basic Prompting

Techniques that provide straightforward instructions to the AI with minimal setup.

- Zero-Shot Prompting: The model completes a task based solely on the instruction, without any examples to guide it.

Example: “Write a 50-word summary of a news article about climate change.”

- Few-Shot Prompting: The model learns a task pattern by following a few provided examples.

Example: “Classify: ‘I love this!’ → Positive; ‘This is terrible’ → Negative; ‘It’s okay’ → ?”
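At the API level, a few-shot prompt is just text assembled from labeled examples followed by the new, unlabeled input. The sketch below builds the sentiment prompt above; the helper name `build_few_shot_prompt`, the instruction wording, and the `→` layout are illustrative assumptions, not a required format.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Assemble labeled examples followed by an unlabeled query (hypothetical helper)."""
    lines = ["Classify the sentiment of each text."]
    for text, label in examples:
        lines.append(f"'{text}' → {label}")
    # The final line leaves the label blank so the model completes the pattern.
    lines.append(f"'{query}' →")
    return "\n".join(lines)


examples = [("I love this!", "Positive"), ("This is terrible", "Negative")]
print(build_few_shot_prompt(examples, "It's okay"))
```

Passing an empty example list to the same helper produces a zero-shot prompt, which makes the difference between the two techniques easy to see.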

Reasoning-Based

Techniques that encourage the AI to think through problems systematically or explore multiple solutions.

- Chain-of-Thought (CoT) Prompting: The model is asked to explain its reasoning step-by-step to solve a problem accurately.

Example: “Calculate 15% of $50 by breaking it down step-by-step.”

- Tree of Thoughts (ToT) Prompting: The model explores several possible solutions at once, like branching paths, to find the best answer to a complex problem.

Example: “To plan a budget-friendly vacation, evaluate three destinations and choose the most affordable option.”

- Multimodal CoT Prompting: The model reasons step-by-step using both text and images to solve a problem.

Example: “Using a photo of a grocery receipt, calculate the total cost of milk and bread step-by-step.”

- ReAct Prompting: The model alternates between reasoning about a step and taking an action, like searching, to solve a problem.

Example: “To find the population of Tokyo, think ‘I need the latest data,’ search for it, then report the number.”
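ReAct is easiest to see as a loop: the model emits a thought and an action, the program runs the action and appends the observation, and the loop repeats until the model gives a final answer. This is a minimal sketch under stated assumptions: the `Thought:` / `Action: tool[input]` / `Final Answer:` text format is loosely modeled on the ReAct paper, `scripted_model` is a hypothetical stand-in for a real LLM call so the code runs offline, and a small calculator tool replaces web search. It reuses the 15% of $50 problem from the Chain-of-Thought example.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator tool accepts.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculate(expr: str) -> float:
    """Evaluate +, -, *, / on numeric literals without calling eval()."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError(f"unsupported expression: {expr!r}")
    return ev(ast.parse(expr, mode="eval"))


TOOLS = {"calculate": lambda arg: str(calculate(arg))}
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def scripted_model(transcript: str) -> str:
    """Hypothetical stand-in for an LLM; a real agent would query a model here."""
    if "Observation:" not in transcript:
        return ("Thought: 15% of 50 is 50 * 15 / 100, so I should calculate it.\n"
                "Action: calculate[50 * 15 / 100]")
    result = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: The calculator returned {result}.\nFinal Answer: {result}"


def react(question: str, model, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)        # reason: the model proposes a thought and action
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError("model gave neither an action nor a final answer")
        tool, arg = match.groups()
        observation = TOOLS[tool](arg)  # act: run the requested tool
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


print(react("What is 15% of $50?", scripted_model))
```

The calculator walks the parsed expression tree instead of calling `eval()`, so a model-produced action string cannot execute arbitrary code.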

Tool-Augmented

Techniques that leverage external tools or data sources to enhance the AI’s responses.

- Retrieval Augmented Generation (RAG) Prompting: The model uses up-to-date information from an external source, like a database or the web, to answer a question.

Example: “Using recent weather data, what is the current temperature in New York City?”

- Automatic Reasoning and Tool-use (ART) Prompting: The model chooses and uses tools, like a calculator or web search, to answer a question accurately.

Example: “To find the square root of 144, use a calculator and provide the result.”

- Program-Aided Language Models (PAL) Prompting: The model writes code to calculate or solve a problem; an interpreter runs the code, and its result becomes the answer.

Example: “To find the average of 10, 20, and 30, write and run code to compute it.”
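In PAL, the model's output is a program rather than a final answer, and a separate interpreter produces the result. The sketch below uses a hypothetical `scripted_model` that returns Python source for the averaging example, and assumes the convention that the program stores its result in a variable named `answer`. Restricting `__builtins__` as shown limits what the program can call but is not a real sandbox; model-written code should run in an isolated environment in practice.

```python
def scripted_model(question: str) -> str:
    """Hypothetical stand-in for an LLM prompted to answer with Python code."""
    return (
        "numbers = [10, 20, 30]\n"
        "answer = sum(numbers) / len(numbers)\n"
    )


def pal_answer(question: str, model) -> float:
    program = model(question)
    # Expose only the builtins the program needs; this limits, but does not sandbox, execution.
    allowed = {"__builtins__": {"sum": sum, "len": len}}
    namespace: dict = {}
    exec(program, allowed, namespace)
    return namespace["answer"]


print(pal_answer("What is the average of 10, 20, and 30?", scripted_model))
```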

Self-Improving

Techniques that enable the AI to refine its own outputs or prompts for better performance.

- Self-Consistency Prompting: The model answers the same question multiple times (typically by sampling several step-by-step reasoning paths) and selects the most common final answer to improve accuracy.

Example: “Ask ‘What is the boiling point of water?’ three times and choose the answer given most often.”

- Reflexion Prompting: The model reviews its own answer, spots errors, and improves it in the next try.

Example: “After writing a summary that’s too short, the model critiques it and adds more details.”

- Automatic Prompt Engineer (APE) Prompting: An AI creates and tests different prompts to find the most effective one for a specific task.

Example: “Generate five prompts to summarize a book and select the one that produces the clearest summary.”
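Self-consistency comes down to a majority vote over the final answers of several sampled completions. In the sketch below, a fixed list of hypothetical completions stands in for repeated model calls at a nonzero temperature, and each completion is assumed to end with a line of the form `Answer: ...`.

```python
from collections import Counter


def final_answer(completion: str) -> str:
    """Extract the text after the last 'Answer:' marker (assumed output format)."""
    return completion.rsplit("Answer:", 1)[1].strip()


def self_consistent_answer(completions: list[str]) -> str:
    """Return the final answer that appears most often across completions."""
    votes = Counter(final_answer(c) for c in completions)
    answer, _count = votes.most_common(1)[0]
    return answer


# Hypothetical sampled completions for "What is the boiling point of water at sea level?"
samples = [
    "Water boils at 100 degrees Celsius at sea level. Answer: 100 °C",
    "At 1 atm, water boils at 100 °C. Answer: 100 °C",
    "I think it is 90 degrees. Answer: 90 °C",
]
print(self_consistent_answer(samples))
```

Voting only on the extracted final answer, rather than on whole completions, lets differently worded reasoning paths that reach the same result count as agreement.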

Advanced Structuring

Techniques that use structured approaches or human interaction to guide complex tasks.

- Meta Prompting: The model, acting as an expert, designs a detailed prompt to guide itself or another AI in completing a specific task.

Example: “As a chef, create a prompt to generate a 5-day vegetarian meal plan for two people.”

- Prompt Chaining: A complex task is split into a series of smaller, connected prompts, where each prompt’s output feeds the next, guiding the model step-by-step.

Example: “Prompt 1: Create a to-do list for organizing a birthday party. Prompt 2: Write an invitation based on the to-do list.”

- Active-Prompt: The model flags the cases it is most uncertain about and asks a human to clarify them, and those answers improve its responses.

Example: “For the review ‘This phone is fire!’, ask a human if it’s positive or negative.”

- Directional Stimulus Prompting: The prompt includes hints or keywords to guide the model toward a specific type of response.

Example: “Write a product description for a laptop. Keywords: sleek, powerful, affordable.”

- Graph Prompting: The model uses a graph to map relationships between items and answer questions about their connections.

Example: “Using a graph of a company’s teams, find which employees work on both Project X and Project Y.”

- Generate Knowledge Prompting: The model first generates a list of relevant facts about a topic, then uses them to produce its final answer.

Example: “List three facts about solar energy, then explain its benefits for homeowners.”
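Prompt chaining is essentially function composition: each prompt's output is formatted into the next prompt. The sketch below mirrors the birthday-party example; `fake_llm` is a hypothetical placeholder for a real model call (it returns canned text and echoes later prompts so the hand-off is visible), and the `{previous}` template slot is an illustrative convention.

```python
def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call so the chain runs offline."""
    if prompt.startswith("Create a to-do list"):
        return "- Pick a date\n- Book a venue\n- Send invitations"
    # Echo the prompt so the chained input is visible in this demo.
    return "DRAFT BASED ON:\n" + prompt


def run_chain(llm, templates: list[str]) -> list[str]:
    """Run prompts in order; each template's {previous} slot receives the prior output."""
    outputs: list[str] = []
    previous = ""
    for template in templates:
        previous = llm(template.format(previous=previous))
        outputs.append(previous)
    return outputs


TEMPLATES = [
    "Create a to-do list for organizing a birthday party.",
    "Write an invitation based on this to-do list:\n{previous}",
]

for step, output in enumerate(run_chain(fake_llm, TEMPLATES), start=1):
    print(f"--- Prompt {step} output ---\n{output}")
```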

Understanding Context Engineering

Context Engineering manages the context window: the finite amount of data, measured in tokens, that an AI model can “remember” at any given moment. This includes prior conversation turns, user-provided documents, and data from external sources. Managing it well ensures the model has the relevant information it needs to interpret prompts accurately.

Core Context Engineering Techniques:

- Conversation History Management: Curates dialogue by including or omitting past messages (e.g., removing an off-topic exchange to refocus the model).

- Contextual Priming: Provides upfront text as a knowledge base (e.g., “The EV market grew 40% in 2023 due to batteries” before asking for 2024 trends).
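Conversation history management often comes down to fitting messages into a token budget. The sketch below keeps the system message, then the newest turns that still fit, dropping older ones. Two assumptions to flag: token counts are approximated by whitespace-separated words (real systems use the model's own tokenizer), and the budget of 20 is arbitrary and chosen only for the demo.

```python
def approx_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep system messages, then the newest other messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(approx_tokens(m["content"]) for m in system)
    kept: list[dict] = []
    for message in reversed(rest):          # walk from newest to oldest
        cost = approx_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))    # restore chronological order


history = [
    {"role": "system", "content": "You are a concise market analyst."},
    {"role": "user", "content": "Tell me a joke about cats."},
    {"role": "assistant", "content": "Why did the cat sit on the computer? To keep an eye on the mouse."},
    {"role": "user", "content": "Back to work: summarize 2024 EV trends."},
]
trimmed = trim_history(history, budget=20)
print([m["role"] for m in trimmed])
```

Here the off-topic joke exchange falls outside the budget, so only the system message and the latest request remain. Trimming by recency is one simple policy; filtering by relevance to the current question is another.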

Advanced Context Engineering: Retrieval-Augmented Generation (RAG)

The pinnacle of context engineering is Retrieval-Augmented Generation (RAG), which connects the model to a live knowledge base (e.g., a company’s internal wiki). The workflow includes:

- Search: Queries the knowledge base for data relevant to the user’s input.

- Augment: Injects retrieved documents into the context window.

- Generate: Uses the new, factual context to produce an accurate answer.
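The three RAG steps can be sketched end to end with a toy in-memory knowledge base. This is a minimal illustration under stated assumptions: retrieval uses simple word-overlap scoring (production systems typically use embedding similarity or a search index), the wiki snippets are hypothetical, and `generate` is a placeholder where a real model call would go.

```python
import re

# Hypothetical internal-wiki snippets standing in for a real knowledge base.
KNOWLEDGE_BASE = [
    "Expense reports are due on the 5th business day of each month.",
    "The VPN must be used when accessing internal dashboards remotely.",
    "New hires receive laptops from the IT desk on their first day.",
]


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Search: rank documents by how many query words they share."""
    q = words(query)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def augment(query: str, retrieved: list[str]) -> list[dict]:
    """Augment: inject retrieved text into the context ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return [
        {"role": "system", "content": "Answer using only the context provided."},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {query}"},
    ]


def generate(messages: list[dict]) -> str:
    """Generate: placeholder for a model call that would read `messages`."""
    return "(model answer grounded in):\n" + messages[-1]["content"]


question = "When are expense reports due?"
messages = augment(question, search(question, KNOWLEDGE_BASE))
print(generate(messages))
```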

From Theory to Practice: API Implementation

These concepts are implemented in APIs like OpenAI’s /chat/completions endpoint. The message list forms the context, with roles reflecting both disciplines:

- system & user Messages: Primarily Prompt Engineering, crafting instructions and personas.

- assistant & tool Messages: Primarily Context Engineering, managing response history and external data.
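A message array in this chat format might look like the sketch below. The message shapes follow OpenAI's documented chat format (an assistant message carrying `tool_calls`, answered by a `tool` message with a matching `tool_call_id`); the tool name `get_sales_data`, the call ID, and the sales figures are hypothetical placeholders, the `tools` definitions a real request needs are omitted, and no request is actually sent.

```python
import json

messages = [
    # Prompt Engineering: instructions and persona.
    {"role": "system", "content": "You are a retail analyst. Reason step by step."},
    {"role": "user", "content": "Which product line should we expand next quarter?"},
    # Context Engineering: the model's earlier tool request and the data returned for it.
    {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",                      # hypothetical call ID
            "type": "function",
            "function": {"name": "get_sales_data",  # hypothetical tool
                         "arguments": json.dumps({"quarter": "last"})},
        }],
    },
    {
        "role": "tool",
        "tool_call_id": "call_1",
        "content": json.dumps({"line_a": 120, "line_b": 340}),  # placeholder figures
    },
]

# Which discipline primarily shapes each role, per the mapping above.
DISCIPLINE = {"system": "prompt", "user": "prompt", "assistant": "context", "tool": "context"}
for m in messages:
    print(f"{m['role']:>9}: primarily {DISCIPLINE[m['role']]} engineering")
```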

The Synergy of Prompt and Context Engineering

Prompt and Context Engineering are deeply synergistic. A perfect prompt is useless without the right data, and a rich context is wasted on a vague prompt. The power lies in using them together. For example, a RAG system (Context Engineering) provides factual sales data, while a Chain-of-Thought prompt (Prompt Engineering) provides precise instructions: “Analyze this data step-by-step to recommend which product line to expand.”

| | Prompt Engineering | Context Engineering |
|---|---|---|
| Primary Goal | To craft the perfect question or instruction (“The How”). | To provide the perfect information environment (“The What”). |
| Scope | Narrow: Focuses on the content and structure of the input prompt. | Broad: Manages the entire context window and external data. |
| Guiding Question | “Am I asking this in the clearest, most effective way?” | “Does the model have the necessary factual data to answer?” |
| Core Methodologies | Zero-Shot, Few-Shot, Chain-of-Thought (CoT), Role Assignment. | Conversation History Management, Contextual Priming, Retrieval-Augmented Generation (RAG), Function Calling. |
| Example API Role | Content of system and user messages. | Management of assistant and tool messages; the overall message array. |

Conclusion

Effective AI development hinges on mastering both Prompt Engineering and Context Engineering. Prompt engineering focuses on crafting precise, intent-driven queries, while context engineering, often using techniques like Retrieval-Augmented Generation (RAG), builds a comprehensive information environment. Combining sophisticated prompting with robust context management allows developers to create highly accurate AI solutions for intricate challenges. Building powerful, reliable AI systems will depend largely on how well developers apply and extend these two disciplines.

Sources

- Zero-Shot Prompting: Brown, T. B., et al. (2020). “Language Models are Few-Shot Learners.” Advances in Neural Information Processing Systems (NeurIPS). https://arxiv.org/abs/2005.14165

- Few-Shot Prompting: Brown, T. B., et al. (2020). “Language Models are Few-Shot Learners.” Advances in Neural Information Processing Systems (NeurIPS). https://arxiv.org/abs/2005.14165

- Chain-of-Thought (CoT) Prompting: Wei, J., et al. (2022). “Chain of Thought Prompting Elicits Reasoning in Large Language Models.” Advances in Neural Information Processing Systems (NeurIPS). https://arxiv.org/abs/2201.11903

- Tree of Thoughts (ToT): Yao, S., et al. (2023). “Tree of Thoughts: Deliberate Problem Solving with Large Language Models.” arXiv preprint. https://arxiv.org/abs/2305.10601

- ReAct: Yao, S., et al. (2022). “ReAct: Synergizing Reasoning and Acting in Language Models.” arXiv preprint. https://arxiv.org/abs/2210.03629

- Multimodal CoT: Zhang, Z., et al. (2023). “Multimodal Chain-of-Thought Reasoning in Language Models.” arXiv preprint. https://arxiv.org/abs/2302.00923

- Retrieval Augmented Generation (RAG): Lewis, P., et al. (2020). “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks.” Advances in Neural Information Processing Systems (NeurIPS). https://arxiv.org/abs/2005.11401

- Automatic Reasoning and Tool-use (ART): Paranjape, A., et al. (2023). “ART: Automatic Multi-step Reasoning and Tool-use for Large Language Models.” arXiv preprint. https://arxiv.org/abs/2303.09014

- Program-Aided Language Models (PAL): Gao, L., et al. (2022). “PAL: Program-Aided Language Models.” arXiv preprint. https://arxiv.org/abs/2211.10435

- Self-Consistency: Wang, X., et al. (2022). “Self-Consistency Improves Chain of Thought Reasoning in Language Models.” arXiv preprint. https://arxiv.org/abs/2203.11171

- Automatic Prompt Engineer (APE): Zhou, Y., et al. (2022). “Large Language Models Are Human-Level Prompt Engineers.” arXiv preprint. https://arxiv.org/abs/2211.01910

- Reflexion: Shinn, N., et al. (2023). “Reflexion: Language Agents with Verbal Reinforcement Learning.” arXiv preprint. https://arxiv.org/abs/2303.11366

- Meta Prompting: Suzgun, M., & Kalai, A. T. (2024). “Meta-Prompting: Enhancing Language Models with Task-Agnostic Scaffolding.” arXiv preprint. https://arxiv.org/abs/2401.12954

- Generate Knowledge Prompting: Liu, J., et al. (2022). “Generated Knowledge Prompting for Commonsense Reasoning.” arXiv preprint. https://arxiv.org/abs/2110.08387

- Prompt Chaining: Wu, T., et al. (2022). “PromptChainer: Chaining Large Language Model Prompts through Visual Programming.” CHI Conference on Human Factors in Computing Systems. https://arxiv.org/abs/2203.06566

- Active-Prompt: Diao, S., et al. (2023). “Active Prompting with Chain-of-Thought for Large Language Models.” arXiv preprint. https://arxiv.org/abs/2302.12246

- Directional Stimulus Prompting: Li, Z., et al. (2023). “Guiding Large Language Models via Directional Stimulus Prompting.” Advances in Neural Information Processing Systems (NeurIPS). https://arxiv.org/abs/2302.11520

- Graph Prompting: Liu, X., et al. (2023). “GraphPrompt: Graph-Structured Prompting for Large Language Models.” arXiv preprint. https://arxiv.org/abs/2307.04993