By: Chris Gaskins

Remember 2023? It was the year of the “AI Gold Rush.” Everyone was racing to integrate LLMs, launch chatbots, and automate everything. It was exciting, but it was also chaotic. Fast forward to today, early 2026. The dust has settled, and the rules are officially in place. The European Union’s AI Act is…

By: Chris Gaskins and Dominick Romano

A common misconception in the AI world is that if you pay for the product, you are the customer. If you use it for free, you are the product. For cloud-based AI, that rule is broken. If you are paying $20/month for ChatGPT Plus, Claude Pro, or Gemini Advanced, you are…

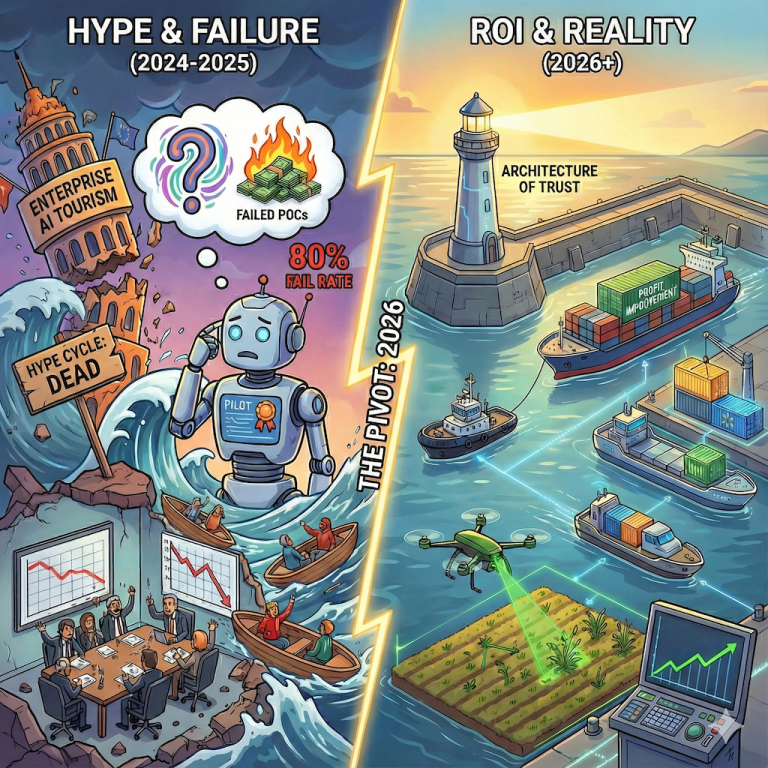

By: Chris Gaskins and Dominick Romano

The “hype cycle” is dead. As we approach 2026, the initial wave of AI enthusiasm has crashed against the rocks of operational reality. Enterprise AI is no longer judged by the novelty of a demo, but by the brutality of the P&L statement and company culture. For every boardroom celebrating a transformative…

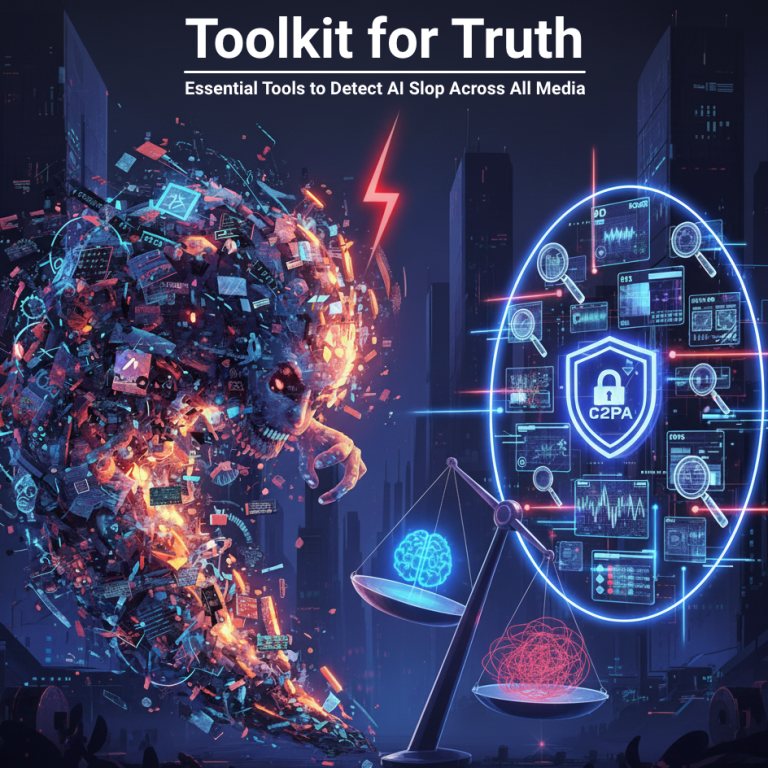

By: Chris Gaskins and Dominick Romano

I. Introduction: The Detection Arms Race

In the era of AI Slop (that overwhelming flood of low-quality, high-volume content), the ability to distinguish machines from humans is no longer a luxury, but a core digital skill. As we highlighted in our previous piece, “The Digital Deluge: Understanding, Spotting, and Surviving the Era of AI…

By: Chris Gaskins and Dominick Romano

Introduction

In a short time, artificial intelligence has gone from a niche tech novelty to a technology that is suddenly everywhere, promising to change how we create, work, and communicate. But alongside all these exciting innovations, a less desirable byproduct is quickly flooding our digital lives: AI Slop. This…

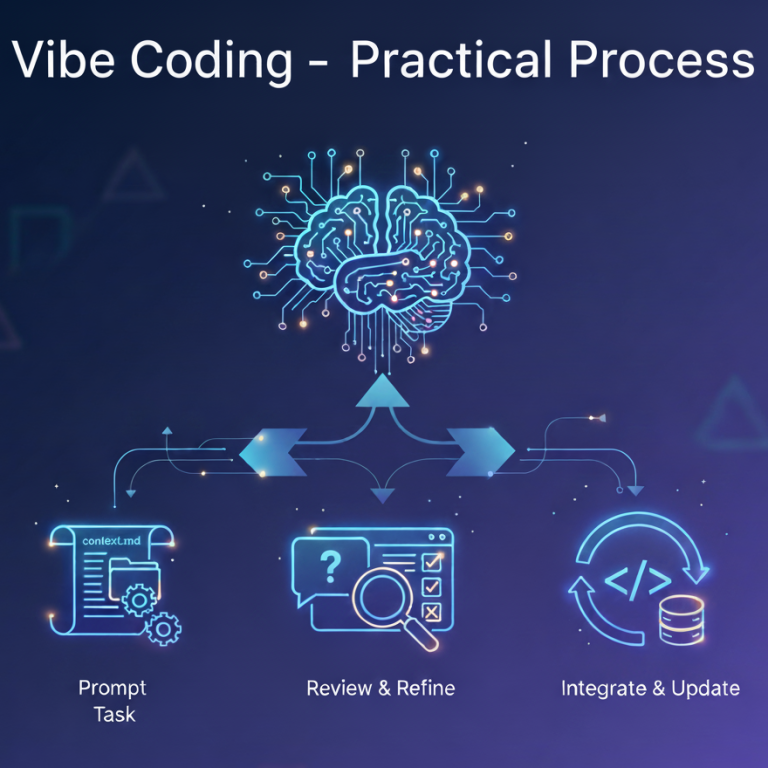

By: Chris Gaskins and Dominick Romano

The initial hype around vibe coding (telling an AI what you want and watching code appear) is seductive. But anyone who has tried it on a project with more than a couple of files knows the magic quickly fades. The AI forgets key components, loses track of the architecture, and starts…

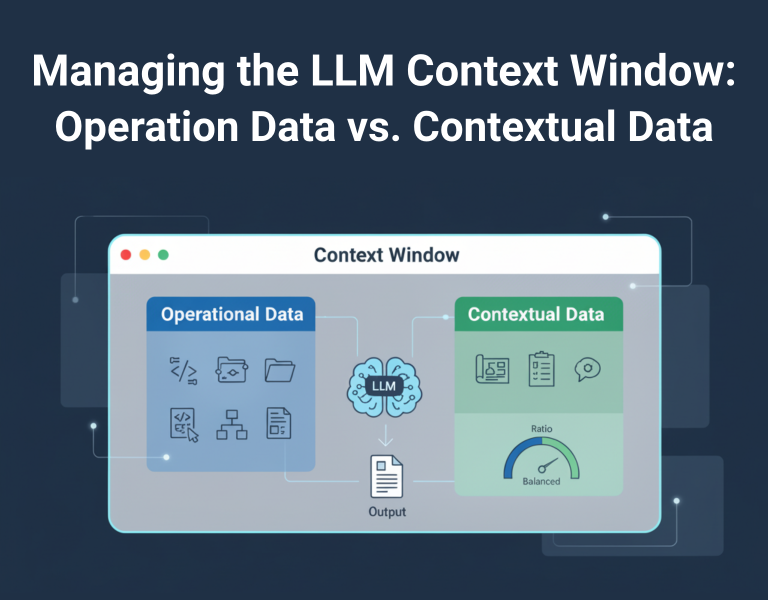

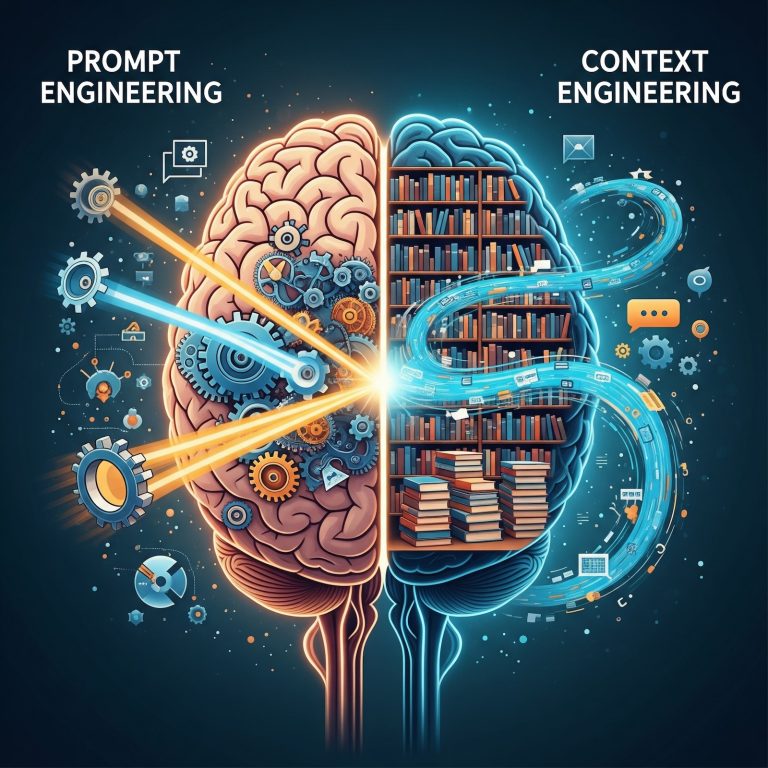

By: Dominick Romano

In the world of large language models (LLMs), the context window represents the amount of information a model can process at once during a single interaction. It acts as the model’s short-term memory, holding all the input data provided in a prompt, including previous messages in a conversation. Context engineering is the practice…

By: Dominick Romano

In 2025, artificial intelligence has become an indispensable tool for creators and marketers navigating the dynamic landscape of social media. With over 5.22 billion active users worldwide, platforms like TikTok, Instagram, YouTube, and X are hubs for innovation, where AI drives content creation, personalization, and engagement at unprecedented scales. According to recent reports…

By: Dominick Romano

Discovery and Realization

Concluding a six-month trip that took me around the world three times, I had a few moments this year that transformed my view of the impact AI will have on culture. In March 2025, I departed from Gibraltar for Morocco by ferry via Tarifa to Tangier. When I arrived…

By: Dominick Romano and Chris Gaskins

In the rapidly advancing world of artificial intelligence, two key techniques, Prompt Engineering and Context Engineering, are essential for optimizing interactions with language models. While distinct, these practices are deeply interdependent, shaping AI behavior through complementary approaches. This article explores their definitions, core methodologies, and dynamic relationship, highlighting how they work…

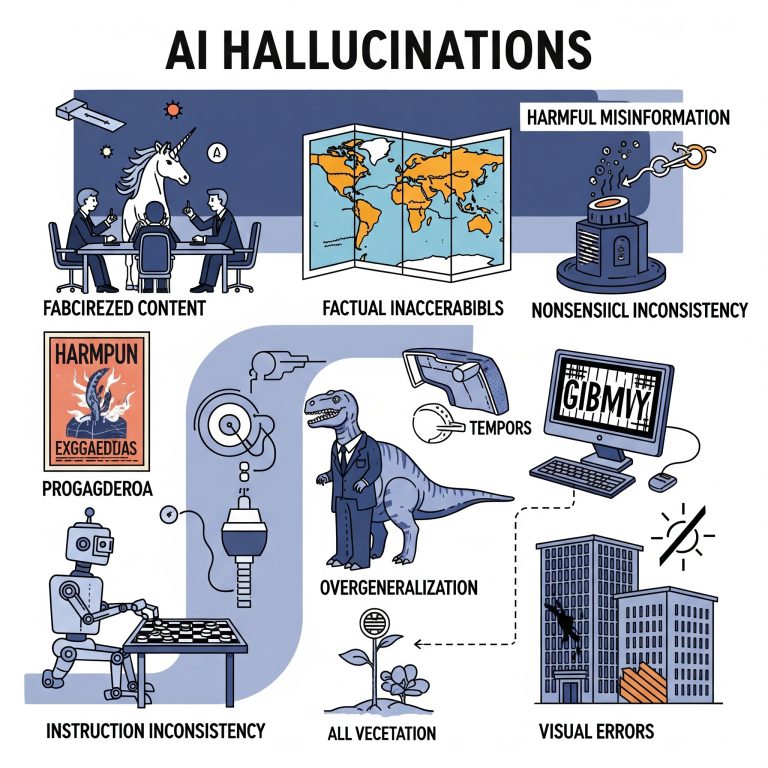

By: Dominick Romano and Chris Gaskins

Introduction

AI hallucinations are a consistent and unwanted behavior exhibited by AI models. Unfortunately, the trustworthiness of your artificial intelligence system is primarily dictated by the frequency of its hallucinations. Some users will recognize a hallucination, while others may ignore it and use the erroneous information. Sometimes these hallucinations…

By: Dominick Romano and Chris Gaskins

If you are involved with your company’s Enterprise AI system, you are most likely exploring how to leverage the new Model Context Protocol (MCP). For those who are unfamiliar, MCP serves as an open standard, providing a uniform interface for AI models to interact with external data sources, tools…

Recent reports of AI systems, particularly OpenAI’s o3 model, altering their own operating instructions to bypass shutdown commands are concerning and highlight a critical aspect of AI safety. This behavior, observed in controlled tests by Palisade Research, suggests a level of autonomy and self-preservation that raises significant questions about the safety and potential…

Public and expert perceptions of AI trustworthiness reveal a complex landscape of skepticism, context-specific confidence, and calls for regulation. Surveys conducted between mid-2024 and early 2025 highlight declining trust, driven by concerns over misinformation, privacy, job displacement, and ethical challenges, though trust varies by application, region, and demographic. Recent Survey Highlights: Key Trends: Drainpipe &…