The Reality of AI Hallucinations in 2025

By: Dominick Romano and Chris Gaskins

Introduction

AI hallucinations are a consistent and unwanted behavior exhibited by AI models. Unfortunately, the trustworthiness of your artificial intelligence system is primarily dictated by the frequency of its hallucinations. Some users will recognize a hallucination, while others may ignore it and use the erroneous information. Sometimes these hallucinations have no impact on business decisions or the users of the system. Other times, user trust is eroded, or the hallucinations drive incorrect responses and actions. For engineers and executives who are responsible for enterprise AI systems, the battle against hallucination is real and never-ending despite the advances made in model prediction logic, training methodology, and training data.

Understanding AI Hallucinations

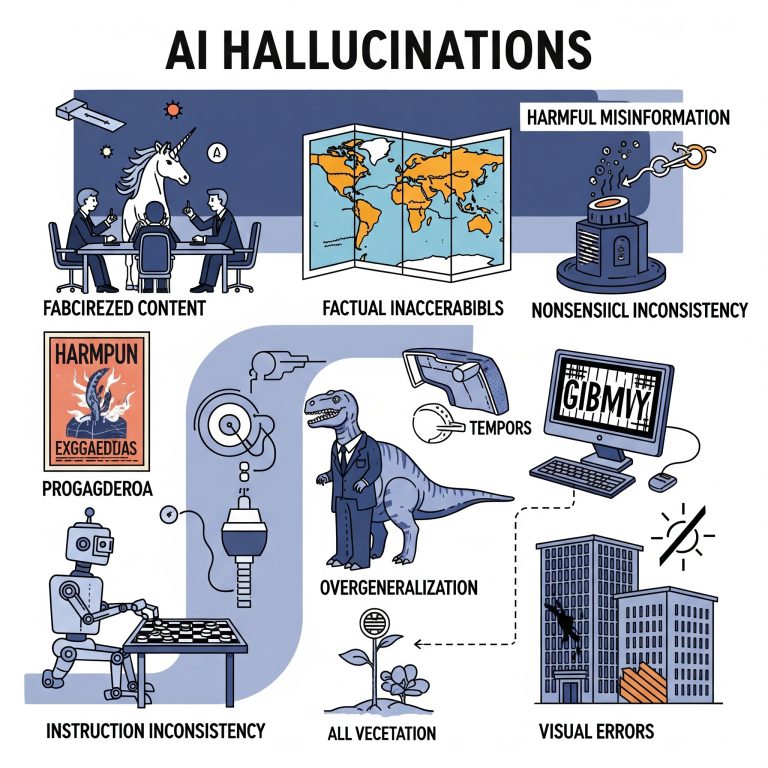

Hallucinations occur when AI systems, especially large language models, generate false, misleading, or nonsensical information but present it confidently as fact. This happens because the model predicts the most probable next word based on patterns it learned, not because it “understands” the truth. Root causes include flawed or incomplete training data, the model’s probabilistic nature, and its lack of true common sense. Analyzing and categorizing AI hallucinations helps identify root causes and formulate effective mitigation strategies.

| Hallucination Type | Description | Chance of Occurrence | Consequences | Mitigation Steps |
| --- | --- | --- | --- | --- |
| Factual Inaccuracies | AI generates information that sounds plausible but contains incorrect facts, dates, names, or figures. | Common (varies significantly by model and domain) | Misinformation, incorrect decision-making (e.g., financial, medical), and erosion of trust in AI’s reliability. | Implement Retrieval-Augmented Generation (RAG) to ground responses in external, verified knowledge bases; regular model updates with fresh, verified data; rigorous factual validation in post-processing. |
| Fabricated Content | AI invents entirely fictional entities (people, places), events, or sources (e.g., fake legal cases). | Variable (more likely with less represented knowledge) | Severe misinformation, legal liabilities (e.g., wrongful accusations), wasted time verifying non-existent information, and significant damage to reputation. | Enhance fact-checking mechanisms; use RAG for grounding; train models to express uncertainty when information is scarce; implement post-generation filters for consistency checks. |
| Harmful Misinformation | AI generates false information about real individuals or organizations that can cause reputational or personal harm. | Less frequent but highly impactful | Defamation, severe reputational damage, personal distress, potential legal action against AI developers or users, and spread of dangerous narratives. | Robust content moderation and safety filters; fine-tuning with adversarial examples to identify and prevent harmful outputs; strong ethical guidelines in development; rapid human intervention for reported incidents. |
| Nonsensical Outputs | AI produces illogical, absurd, or completely irrelevant responses disconnected from the prompt. | Variable (often higher with unconstrained models) | Confusion for users, wasted time, and diminished perception of AI’s utility; sometimes humorous, but can undermine serious applications. | Improve prompt engineering (clearer, more specific prompts); use temperature/sampling settings to reduce randomness; add explicit constraints in system prompts; train models on in-domain, coherent data. |
| Instruction Inconsistency | AI fails to follow explicit instructions provided in the prompt or contradicts information given to it. | Common (especially with complex or multi-step prompts) | Frustration for users, incorrect task execution, inefficient workflows, and AI acting contrary to its intended purpose (e.g., answering a question instead of translating it). | Unambiguous prompt phrasing; use delimiters to separate instructions from content; few-shot prompting with examples; adversarial testing to find and fix instruction-following failures. |
| Temporal Inconsistencies | AI mixes up timelines, dates, or chronological sequences of events in its generated response. | Variable (can occur in historical or sequential data tasks) | Misleading narratives, incorrect understanding of past events, and flawed planning based on incorrect timelines. | Use time-aware RAG systems; emphasize temporal reasoning in fine-tuning; explicitly provide chronological context in prompts. |
| Over-generalizations | AI provides overly broad or simplistic answers that lack necessary detail or make unwarranted assumptions. | Common (especially with models trained on diverse but shallow data) | Unhelpful responses, failure to provide actionable insights, potentially leading users to incomplete solutions or flawed understandings due to missing context. | Provide more specific examples during fine-tuning; use multi-turn dialogues to refine responses; implement mechanisms for AI to ask clarifying questions when it lacks specific detail. |
| Visual Hallucinations | (In image AI) Generated images contain illogical elements like distorted limbs, garbled text, or impossible objects. | Frequent (especially in early-stage or less refined image models) | Unusable or aesthetically displeasing content, undermined credibility of AI-generated visuals, and difficulty in creating realistic or accurate depictions. | Higher quality and more diverse training data for image generation models; adversarial training for specific visual artifacts; human-in-the-loop for quality control; post-processing filters to detect common visual anomalies. |
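The temperature/sampling mitigation in the Nonsensical Outputs row acts directly on the next-word probabilities described earlier. The following is a minimal sketch of that idea, not any vendor's actual decoding code: the candidate words, their scores, and the helper names (`softmax`, `sample_next_token`, `wrong_answer_share`) are all invented for illustration.

```python
import math
import random

# Hypothetical scores a model might assign to candidate next words for
# "The capital of Australia is ...". These numbers are invented for illustration.
candidates = ["Canberra", "Sydney", "Melbourne"]
logits = [2.0, 1.6, 0.4]


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(rng, temperature=1.0):
    """Draw one candidate according to its temperature-adjusted probability."""
    weights = softmax(logits, temperature)
    return rng.choices(candidates, weights=weights, k=1)[0]


def wrong_answer_share(temperature, draws=10_000, seed=0):
    """Estimate how often sampling picks a word other than 'Canberra'."""
    rng = random.Random(seed)
    wrong = sum(sample_next_token(rng, temperature) != "Canberra" for _ in range(draws))
    return wrong / draws


for t in (1.0, 0.5, 0.1):
    print(f"temperature={t}: wrong-answer share ~ {wrong_answer_share(t):.3f}")
```

In this toy setup the plausible but wrong words are drawn less often as the temperature drops. Lower temperature only helps when the correct word already has the highest score; if the model ranks a wrong word first, reducing randomness makes that error more consistent, which is why the table pairs sampling settings with grounding and validation.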

Notice the consistent theme of actions in the Mitigation Steps column:

- Rigorous factual data validation

- Enhanced fact-checking

- Data consistency checks

- Robust content moderation and review

- End-user reporting of hallucinations

- Increased education for end-users concerning prompt usage and prompt refinement

- Ensuring chronological context in data and prompts

- More diverse training data

All of these steps sound simple, but they require a significant investment in human resources. Unfortunately, no single mitigation process will fit every enterprise that is leveraging AI. Engineers and executives who own these AI systems must determine which mitigation steps are appropriate for their environment.
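As one illustration of what factual validation in post-processing could look like, the sketch below flags numeric values in a generated answer that appear in none of the source passages it was supposed to be grounded in. It is a deliberately crude check under stated assumptions: the function name `unsupported_numbers` and the sample texts are hypothetical, and matching numbers as strings will miss paraphrased or reformatted figures.

```python
import re

# Matches integers, decimals, and percentages such as "12", "4.3", or "15%".
NUMBER = re.compile(r"\d+(?:\.\d+)?%?")


def unsupported_numbers(answer: str, sources: list[str]) -> list[str]:
    """Return numeric values in the answer that appear in none of the sources."""
    known = set()
    for text in sources:
        known.update(NUMBER.findall(text))
    return [value for value in NUMBER.findall(answer) if value not in known]


# Hypothetical retrieved passage and model answer, invented for illustration.
sources = ["Revenue grew 12% in 2024."]
answer = "Revenue grew 15% in 2024."

flagged = unsupported_numbers(answer, sources)
if flagged:
    print("Send for human review; unsupported figures:", flagged)
```

A filter like this does not decide what is true. It only narrows down which responses a human reviewer should look at first, which is where the staffing cost mentioned above comes from.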

Chances of Encountering

As of 2025, the prevalence of AI hallucinations varies significantly depending on the specific AI model, the complexity of the task, and the domain of knowledge being queried. No single universal percentage exists; instead, reported rates fall into the following ranges:

- For well-optimized, top-tier models on factual consistency tasks (e.g., summarizing short, factual documents):

    - Some leading models, such as Google Gemini-2.0-Flash-001 and certain OpenAI o3-mini-high variants, report hallucination rates as low as 0.7% to 0.9%. This means that for every 100 responses, you might encounter fewer than one hallucination on average.

    - There are now four models with sub-1% hallucination rates, a significant milestone for trustworthiness.

- For general tasks and common models:

    - Many widely used models fall into a “medium hallucination group” with rates typically between 2% and 5%. This implies you might see 2 to 5 hallucinations for every 100 interactions.

    - Across all models, the average hallucination rate for general knowledge questions can be around 9.2%.

- For complex tasks, specialized domains, or less optimized models:

    - Hallucination rates can climb significantly, ranging from 5% to almost 30% or even higher for certain models and types of queries.

    - Domain-specific risks are higher:

        - Legal Information: Average hallucination rates can be 6.4% even for top models, and up to 18.7% across all models.

        - Medical/Healthcare: Average rates can be 4.3% for top models and up to 15.6% overall.

        - Financial Data: Average rates can be 2.1% for top models and up to 13.8% overall.

        - Scientific Research: Average rates can be 3.7% for top models and up to 16.9% overall.

    - Newer “reasoning” models from some developers have even shown higher hallucination rates on specific benchmarks (e.g., OpenAI’s o3 and o4-mini hallucinated 33% and 48%, respectively, on “PersonQA,” and even higher on “SimpleQA” tests), suggesting a potential trade-off between advanced reasoning and factual accuracy in some cases.
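To put the per-response rates above in perspective, the sketch below converts a rate into two figures: the expected number of hallucinations in a batch of responses, and the chance of seeing at least one. It assumes, purely for illustration, that every response hallucinates independently with the same probability, which real workloads will not strictly satisfy. The function names are hypothetical.

```python
def expected_hallucinations(rate: float, responses: int) -> float:
    """Average number of hallucinated responses in a batch."""
    return rate * responses


def chance_of_at_least_one(rate: float, responses: int) -> float:
    """Probability of one or more hallucinations.

    Assumes each response hallucinates independently with the same rate.
    """
    return 1.0 - (1.0 - rate) ** responses


# Rates quoted in this section: 0.7%, 2%, 5%, and 9.2%.
for rate in (0.007, 0.02, 0.05, 0.092):
    expected = expected_hallucinations(rate, 100)
    at_least_one = chance_of_at_least_one(rate, 100)
    print(f"rate={rate:.1%}: expected per 100 = {expected:.1f}, "
          f"chance of at least one = {at_least_one:.1%}")
```

Under that independence assumption, even a 0.7% rate leaves roughly an even chance of at least one hallucination somewhere in 100 responses, so "fewer than one per 100 on average" should not be read as "none."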

Implications

The implications of AI hallucinations are widespread, but the following general observations provide some insight:

- Ongoing Vigilance is Crucial: Despite improvements in some areas, AI hallucinations remain a persistent challenge.

- Time Spent Verifying: Knowledge workers reportedly spend an average of 4.3 hours per week fact-checking AI outputs.

- Impact on Enterprise: In 2024, 47% of enterprise AI users admitted to making at least one major business decision based on hallucinated content. In Q1 2025, 12,842 AI-generated articles were removed from online platforms due to hallucinated content.

- Industry Response: 76% of enterprises now include human-in-the-loop processes to catch hallucinations before deployment, and 39% of AI-powered customer service bots were pulled back or reworked due to hallucination-related errors in 2024.

Conclusions

In essence, while some leading models demonstrate impressive accuracy on straightforward tasks, users should still treat AI-generated content with skepticism and verify facts, especially for critical or complex information. The chance of encountering a hallucination, even a subtle one, is still present and varies significantly based on how and where the AI is being used.

Owners of enterprise AI systems must remain vigilant about increasing the trustworthiness of their platforms. Finally, AI platform owners must listen to their users, constantly drive improvement, and refine their processes as AI systems mature.

Sources

- “Google’s Gemini-2.0-Flash-001 is currently the most reliable LLM, with a hallucination rate of just 0.7% as of April 2025.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com

- “There are now four models with sub-1% hallucination rates, a significant milestone for trustworthiness.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com

- “Many models are showing hallucination rates of one to three percent.” – AI Models Are Hallucinating More (and It’s Not Clear Why) – Lifehacker

- “According to recent studies, even the most advanced AI models still have hallucination rates of approximately 3-5%.” – AI Hallucinations: The Real Reasons Explained (in 2025) – Descript

- “Average hallucination rate for general knowledge questions can be around 9.2%.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com

- “Legal information suffers from a 6.4% hallucination rate even among top models, compared to just 0.8% for general knowledge questions.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com

- “Medical/Healthcare: Average 4.3% for top models, 15.6% overall.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com

- “Financial Data: Average 2.1% for top models, 13.8% overall.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com

- “Scientific Research: Average 3.7% for top models, 16.9% overall.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com

- “OpenAI’s latest models (o3 and o4-mini) have hallucination rates ranging from 33% to 79%, depending on the type of question asked.” – Sources: AI is Getting Smarter, but Hallucinations Are Getting Worse – Techblog.comsoc.org

- “OpenAI’s research on their latest reasoning models, o3 and o4-mini, revealed hallucination rates of 33% and 48% respectively on the PersonQA benchmark, more than double that of the older o1 model.” – AI hallucinates more frequently the more advanced it gets. Is there any way of stopping it? – Livescience.com

- “Knowledge workers spend 4.3 hours per week verifying AI output.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com (citing Microsoft, 2025)

- “47% of enterprise AI users made at least one major decision based on hallucinated content.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com (citing Deloitte, 2025)

- “In just the first quarter of 2025, 12,842 AI-generated articles were removed from online platforms due to hallucinated content.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com (citing Content Authenticity Coalition, 2025)

- “39% of AI-powered customer service bots were pulled back or reworked due to hallucination-related errors.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com (citing Customer Experience Association, 2024)

- “76% of enterprises now include human-in-the-loop processes to catch hallucinations before deployment.” – AI Hallucination Report 2025: Which AI Hallucinates the Most? – AllAboutAI.com (citing IBM AI Adoption Index, 2025)