Navigating the EU AI Act in 2026

By: Chris Gaskins

Remember 2023? It was the year of the “AI Gold Rush.” Everyone was racing to integrate LLMs, launch chatbots, and automate everything. It was exciting, but it was also chaotic.

Fast forward to today, early 2026. The dust has settled: the European Union’s AI Act has been in force since August 2024, and its obligations are phasing in on a fixed schedule. It is no longer a looming threat; it is becoming the blueprint for how AI is built and sold worldwide.

Whether you are a startup in Austin or an enterprise in Berlin, these rules can affect you. If you sell AI into the European market, one of the world’s largest single markets, or if your AI’s output is used in the EU, you play by these rules. This is the “Brussels Effect” in action.

Here is the plain-English breakdown of where we stand today and what you need to do next.

1. The Red Light: What’s Off the Table?

Status: Strictly Illegal since February 2, 2025.

Last year, the EU drew a line in the sand. Certain uses of AI are deemed an “unacceptable risk” to fundamental rights. If your company is still using these, you face the highest fines in the Act: up to €35 million or 7% of worldwide annual turnover, whichever is higher. Examples include:

- Social Scoring: No more evaluating or ranking people based on their social behavior or personal traits in ways that lead to unjustified or disproportionate harm to them.

- Workplace Emotion Scanning: Using AI to infer whether an employee is frustrated or happy at work is banned (the same applies in schools), unless it serves a specific medical or safety purpose.

- Subliminal Manipulation: You cannot use subliminal or deliberately manipulative AI techniques that distort people’s behavior in ways likely to cause them significant harm.

2. The Yellow Light: The “CE Mark” for Software

Status: The “High-Risk” Deadline is August 2, 2026.

This is the most critical section for business leaders. If your AI is used to make, or significantly shape, decisions about people’s access to jobs, credit, education, or essential services, it is likely High-Risk. This includes AI for hiring (screening resumes), banking (credit scoring), and education (grading exams).

From August 2, high-risk systems placed on the EU market must carry a CE Mark. You’ve seen this symbol on physical products like chargers or toys, but now it’s coming to your code.

What does it take to get a CE Mark for AI?

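It’s not just a sticker. To legally “affix” this mark, a provider must complete a Conformity Assessment. For most high-risk uses, such as hiring or credit scoring, the provider carries this out internally rather than through an outside auditor. Among other things, it involves: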

- The Technical File: A comprehensive document explaining exactly how the AI works, its limitations, and its logic.

- Data Governance: Proving that the data used to train the model was high-quality, representative, and checked for biases.

- Human Oversight: Designing the system so a human can “hit the brakes” at any time.

- Logging: The system must automatically record its own activity so that if something goes wrong, there’s an “audit trail.”
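To make the logging and human-oversight requirements more concrete, here is a minimal Python sketch, assuming your model can be called as a plain function. The class `OverseenModel`, its methods, and the JSON Lines log format are hypothetical illustrations; the Act does not prescribe any particular design, and a production system would also need tamper protection, retention rules, and richer context in each record.

```python
import json
import time
import uuid
from dataclasses import dataclass
from typing import Callable


@dataclass
class OverseenModel:
    """Hypothetical wrapper around a high-risk model: every decision is logged,
    and a human operator can halt the system at any time."""
    predict: Callable[[dict], str]  # your model's scoring function (assumed interface)
    model_version: str
    log_path: str                   # append-only JSON Lines audit file
    halted: bool = False

    def decide(self, case_id: str, features: dict) -> str:
        if self.halted:
            # Refuse automated decisions once a human has pulled the brake.
            self._log({"event": "refused", "case_id": case_id})
            raise RuntimeError("System halted by a human operator")
        output = self.predict(features)
        # Log a case reference rather than the raw input data.
        self._log({"event": "decision", "case_id": case_id, "output": output})
        return output

    def stop(self, operator: str, reason: str) -> None:
        """The "hit the brakes" control: records who stopped the system and why."""
        self.halted = True
        self._log({"event": "halt", "operator": operator, "reason": reason})

    def _log(self, record: dict) -> None:
        # Each entry gets a unique id, a timestamp, and the model version.
        entry = {"id": str(uuid.uuid4()), "ts": time.time(),
                 "model_version": self.model_version, **record}
        with open(self.log_path, "a", encoding="utf-8") as f:
            f.write(json.dumps(entry) + "\n")


# Usage with a toy resume-screening rule standing in for a real model.
screener = OverseenModel(
    predict=lambda f: "interview" if f["years_experience"] >= 3 else "human review",
    model_version="demo-0.1",
    log_path="audit_log.jsonl",
)
print(screener.decide("case-001", {"years_experience": 5}))
screener.stop(operator="hr-lead", reason="bias review")
```

Logging a case reference instead of the applicant’s raw data is a deliberate choice: the audit trail still ties each decision to a case file without copying personal data into the log.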

Pro Tip: If you buy your AI from a vendor, ask for their Declaration of Conformity. If they can’t provide it by August, you might be forced to turn the system off.

3. The Green Light: It’s Not Just “Anything Goes”

Status: The transparency rules for “Limited Risk” systems apply from August 2, 2026.

Most AI is considered “Minimal” or “Limited” risk. Your email spam filter or video game AI is typically Minimal risk, while your customer service chatbot is Limited risk. However, “Green” doesn’t mean “unregulated.”

Under the Act, Limited Risk systems (those that interact with people or generate content) carry Transparency Obligations:

- Disclosure: If a customer is talking to a chatbot, they must be told it’s a machine. No more pretending the bot is a human named “Steve.”

- Deepfake Labeling: If your AI generates or modifies photos, videos, or audio, the output must be marked as AI-generated in a machine-readable way (such as a watermark), and realistic “deepfakes” must be clearly labeled.

- AI-Generated Text: If you use AI to write public-facing content about matters of “public interest” (like news or policy), you must disclose that it was AI-generated, unless a human has reviewed it and someone holds editorial responsibility for it.
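The transparency bullets above boil down to a few yes/no questions about each kind of output. Below is a simplified Python sketch of that mapping, assuming you can describe each output with a handful of flags. The `AIOutput` fields and the duty wording are hypothetical. For public-interest text, the sketch encodes the Act’s exemption for content that a human has reviewed and for which someone holds editorial responsibility. This is an engineering checklist, not legal advice.

```python
from dataclasses import dataclass


@dataclass
class AIOutput:
    """Hypothetical description of one kind of AI output in your product."""
    kind: str                       # "chat", "image", "video", "audio", or "text"
    talks_to_people: bool           # e.g. a customer-facing chatbot
    looks_real: bool                # realistic synthetic media ("deepfake")
    public_interest_text: bool      # published to inform the public (news, policy)
    human_editor_responsible: bool  # a human reviewed it and holds editorial responsibility


def required_disclosures(o: AIOutput) -> list[str]:
    """Simplified mapping of the three transparency bullets to concrete to-dos."""
    duties = []
    if o.talks_to_people:
        duties.append("tell users they are interacting with an AI")
    if o.kind in {"image", "video", "audio"} and o.looks_real:
        duties.append("label as AI-generated and embed a machine-readable marker")
    if o.kind == "text" and o.public_interest_text and not o.human_editor_responsible:
        duties.append("disclose that the text was AI-generated")
    return duties


support_bot = AIOutput("chat", True, False, False, False)
edited_news = AIOutput("text", False, False, True, True)
print(required_disclosures(support_bot))
print(required_disclosures(edited_news))
```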

The “Voluntary Code of Conduct”:

Many smart companies are going beyond the minimum. The EU encourages businesses to sign onto Voluntary Codes of Conduct. By following high-risk standards even when they don’t have to, companies are using compliance as a marketing tool—telling customers, “You can trust our AI because we follow the highest safety standards in the world.”

4. The General Purpose AI (GPAI) Clause

Status: Rules for providers of the models behind tools like ChatGPT, Gemini, and Claude have applied since August 2, 2025.

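If you build the “foundational” models that other people use, you have extra homework. You must keep technical documentation, put in place a policy to respect EU copyright law (including honoring rights holders’ opt-outs from text and data mining), and publish a sufficiently detailed summary of the content used to train the model. If the model is exceptionally powerful, it’s tagged as having Systemic Risk, which requires model evaluations including adversarial testing (“red-teaming”), risk mitigation, serious-incident reporting, and strong cybersecurity.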
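How powerful is “exceptionally powerful”? The Act presumes systemic risk when the cumulative compute used to train a model exceeds 10^25 floating-point operations (FLOPs), and the Commission can also designate models on other grounds. A quick way to estimate where a model lands is the common rule of thumb that training a dense transformer costs about 6 FLOPs per parameter per training token. That heuristic comes from the scaling-law literature, not from the Act, and the model sizes below are hypothetical, so treat this Python sketch as a rough estimate under that assumption.

```python
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25  # presumption threshold for GPAI systemic risk


def estimated_training_flops(parameters: float, training_tokens: float) -> float:
    """Rough dense-transformer estimate: ~6 FLOPs per parameter per token (heuristic)."""
    return 6 * parameters * training_tokens


def presumed_systemic_risk(parameters: float, training_tokens: float) -> bool:
    """True if the estimated training compute exceeds the threshold."""
    return estimated_training_flops(parameters, training_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical model sizes, for illustration only.
for name, n_params, n_tokens in [
    ("70B params, 15T tokens", 70e9, 15e12),
    ("400B params, 15T tokens", 400e9, 15e12),
]:
    flops = estimated_training_flops(n_params, n_tokens)
    print(f"{name}: {flops:.1e} FLOPs, systemic risk presumed: "
          f"{presumed_systemic_risk(n_params, n_tokens)}")
```

The first model comes in around 6.3 × 10^24 FLOPs, under the line; the second, around 3.6 × 10^25, is over it.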

At-a-Glance: Comparing the Categories

| Risk Tier | Examples | Key Requirement | Status / Deadline |
|---|---|---|---|
| 🔴 Red | Social scoring, workplace emotion detection | Prohibited | Banned since Feb 2025 |
| 🟡 Yellow | Hiring, credit scoring, healthcare tools | CE Mark & Conformity Assessment | Due Aug 2026 |
| 🟢 Green | Chatbots, spam filters, AI-gen images | Transparency & Labels | Due Aug 2026 |
| 🌐 GPAI | Large Language Models (LLMs) | Copyright & Tech Docs | Applies since Aug 2025 |

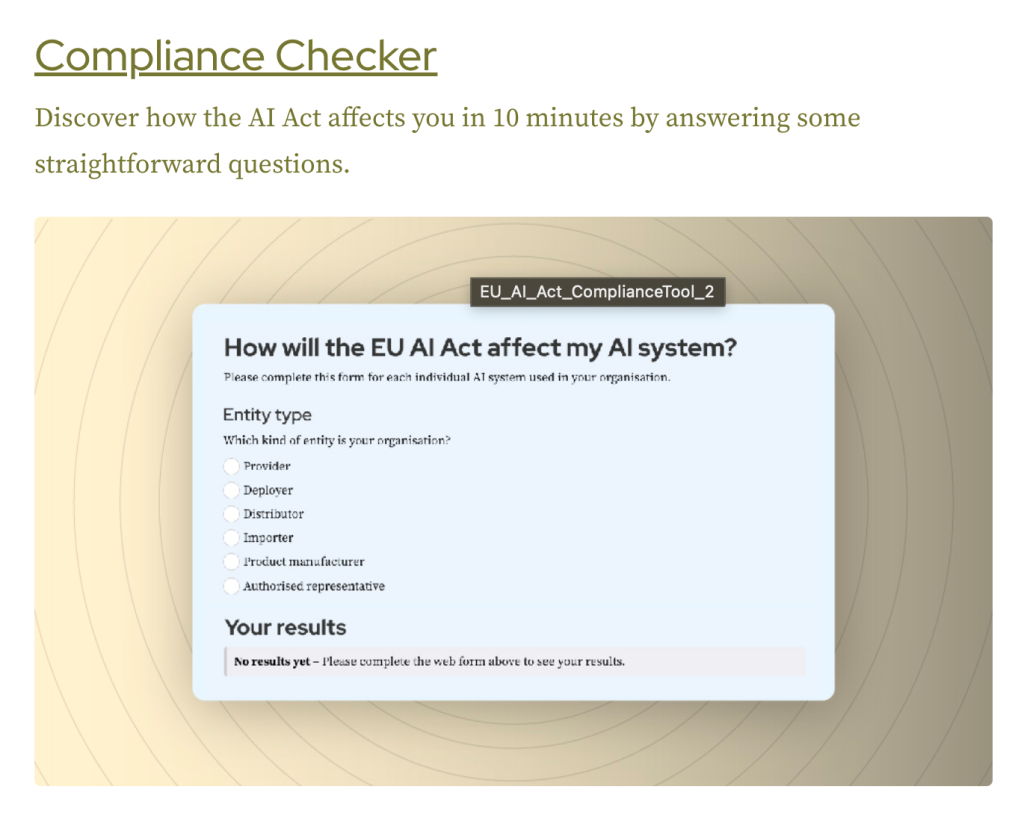

The Official Shortcut: The EU Compliance Checker

Confused about where your product fits? You don’t have to guess. The European AI Office provides an official EU AI Act Compliance Checker.

It’s an interactive tool that walks you through a series of questions about your AI’s intended use and provides a preliminary report on your risk category and legal obligations. Think of it as “Step Zero” before you dive into a full audit.
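To show the kind of reasoning a question-driven checker performs, here is a deliberately simplified Python sketch of a first-pass triage. It is not the official tool’s logic. The use-case labels are hypothetical and drawn only from this article’s examples, while the Act’s real lists are longer and include exceptions. The one design point worth copying is the order: check the most restrictive tier first, so a prohibited use never slips through as “just a chatbot.”

```python
from enum import Enum


class Tier(Enum):
    RED = "prohibited"
    YELLOW = "high-risk"
    GREEN_LIMITED = "limited risk (transparency duties)"
    GREEN_MINIMAL = "minimal risk"


# Hypothetical labels based on this article's examples; the Act's lists are longer
# and include exceptions (e.g. emotion recognition for medical or safety reasons).
PROHIBITED_USES = {"social_scoring", "workplace_emotion_recognition", "subliminal_manipulation"}
HIGH_RISK_USES = {"resume_screening", "credit_scoring", "exam_grading"}


def triage(use_case: str, talks_to_people: bool, generates_media: bool) -> Tier:
    """First-pass risk tier, checking the most restrictive tier first.

    A high-risk chatbot still owes transparency duties; this sketch only
    reports the strictest tier that applies."""
    if use_case in PROHIBITED_USES:
        return Tier.RED
    if use_case in HIGH_RISK_USES:
        return Tier.YELLOW
    if talks_to_people or generates_media:
        return Tier.GREEN_LIMITED
    return Tier.GREEN_MINIMAL


for case in [("resume_screening", False, False),
             ("support_chatbot", True, False),
             ("spam_filter", False, False)]:
    print(case[0], "->", triage(*case).value)
```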

Your 2026 Executive Action Plan

The following steps apply to every business leader, whether you build AI or just use it. You can’t comply with the rules above if you don’t know where the AI is hiding in your organization.

- Conduct an AI Audit (Inventory): Ask every department head to list the software they use. You might be surprised to find your HR team is using an AI “resume sorter” (High Risk) or your marketing team is using AI “deepfake” tools for ads (Transparency Rules). You can’t manage what you haven’t mapped.

- Establish an AI “Usage Policy”: Create a clear document for employees. Which tools are “Green Light” (approved)? Which tools require a “Yellow Light” review before being used for customer data?

- Check Your Vendor Roadmap: For every “High Risk” tool you pay for (Hiring, Finance, Healthcare), demand a written statement from the vendor. Ask: “Will your tool be CE Mark compliant by August 2026?”

- Add “Bot Disclosures” to Your UI: If you have a customer-facing chatbot or AI-generated support articles, add a “Powered by AI” badge today. It’s an easy way to get ahead of the transparency rules that apply from August 2026.

- Assign an AI Compliance Lead: This doesn’t have to be a new hire, but one person (often the CTO or a Legal Lead) needs to be responsible for tracking these deadlines so your company doesn’t get hit with a surprise fine.
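The inventory and vendor-roadmap steps can live in something as simple as a spreadsheet, but a structured record makes it easy to generate a to-do list for the compliance lead. Here is a minimal Python sketch under that assumption. The `AISystem` fields, the example systems, and the vendor names are all hypothetical. The only fixed input is the August 2, 2026 high-risk deadline, and the date is passed in explicitly so the countdown is reproducible.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

HIGH_RISK_DEADLINE = date(2026, 8, 2)
TIER_ORDER = {"red": 0, "yellow": 1, "green": 2}  # most urgent first


@dataclass
class AISystem:
    """One row of a hypothetical company-wide AI inventory."""
    name: str
    department: str
    tier: str                          # "red", "yellow", or "green"
    vendor: Optional[str] = None       # None means built in-house
    declaration_on_file: bool = False  # vendor's EU declaration of conformity received?


def open_actions(inventory: list[AISystem], as_of: date) -> list[str]:
    """List follow-ups for red and yellow systems, most urgent tier first."""
    days_left = (HIGH_RISK_DEADLINE - as_of).days
    actions = []
    for item in sorted(inventory, key=lambda entry: TIER_ORDER[entry.tier]):
        label = f"{item.name} ({item.department})"
        if item.tier == "red":
            actions.append(f"{label}: stop using it; this is a prohibited practice")
        elif item.tier == "yellow" and item.vendor is None:
            actions.append(f"{label}: complete the conformity assessment in-house, "
                           f"{days_left} days left")
        elif item.tier == "yellow" and not item.declaration_on_file:
            actions.append(f"{label}: request a declaration of conformity from "
                           f"{item.vendor}, {days_left} days left")
    return actions


inventory = [
    AISystem("Resume sorter", "HR", "yellow", vendor="ExampleHR Inc."),
    AISystem("Spam filter", "IT", "green", vendor="ExampleMail"),
    AISystem("Credit model", "Finance", "yellow"),
]
for action in open_actions(inventory, as_of=date(2026, 2, 1)):
    print(action)
```

Green systems produce no action here, which mirrors the article’s framing. If you want the inventory to track chatbot badges too, a natural extension is a per-system disclosure flag.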

The AI Act isn’t here to kill innovation; it’s here to build trust. In 2026, the companies that win will be the ones that prove they are not only fast but also responsible.