To MCP or Not to MCP – That is the Question

By: Dominick Romano and Chris Gaskins

If you are involved with your company’s Enterprise AI system, you are most likely exploring how to leverage the new Model Context Protocol (MCP). For those who are unfamiliar, MCP serves as an open standard, providing a uniform interface for AI models to interact with external data sources, tools, and services. Its core purpose is to standardize the communication layer between AI applications (clients/hosts) and diverse external systems (servers) where enterprise-specific data resides. The goal of this standardization is to eliminate the need for bespoke integrations, allowing AI models to discover, inspect, and invoke functions, retrieve data, and access predefined prompts from these external systems without requiring prior, unique configurations. So far, so good, right? If MCP works as advertised, it should be a huge boost to Enterprise AI systems.

Now let’s dive into how MCP works. Architecturally, MCP operates on a client-server model, where AI applications initiate requests and external systems provide the requested resources or execute specified operations. This framework facilitates enhanced contextual understanding for the AI system by providing seamless access to real-time or specialized information.

Explanation of MCP Components:

- AI Application (Client/Host): This represents the AI system that wants to interact with external resources. It could be a Large Language Model (LLM), an autonomous AI agent, a conversational AI system, or any other AI application.

- MCP Client Implementation: This is the software component within the AI application that handles the communication according to the MCP standard. It sends requests to MCP servers and processes their responses.

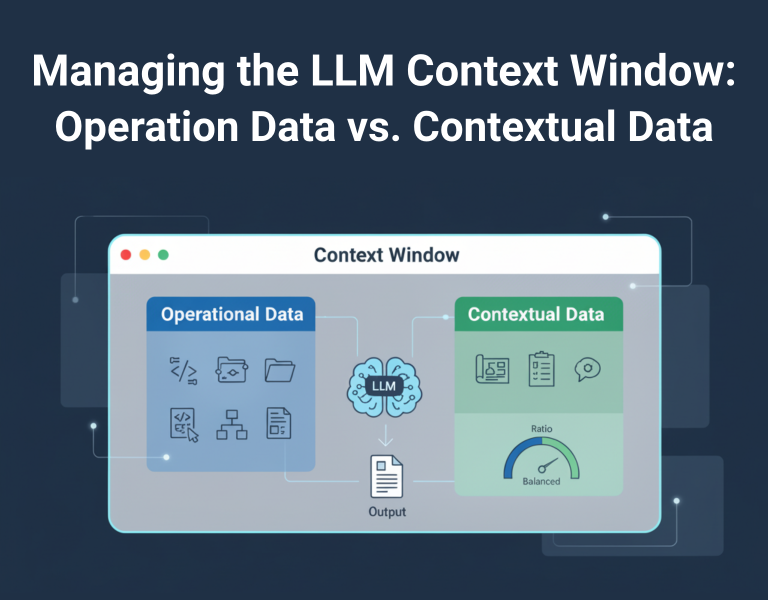

- Large Language Model (LLM) or other AI Model: This is the core AI model that leverages the information and capabilities exposed through MCP. It receives data from the MCP client and sends requests for information or actions through it.

- External System (Server): This represents any external system that wants to expose its data, functions, or prompts to AI models via MCP.

- MCP Server Implementation: This is the software component within the external system that listens for MCP requests from clients, processes them, and returns the appropriate responses. It acts as the interface for the external system.

- Data Sources: These are databases, APIs, file systems, or any other repositories of information that the external system can access and expose to the AI model.

- Tools/Services: These are external functionalities, APIs, or web services that the AI model can invoke through the MCP server (e.g., calendar services, e-commerce APIs, data analysis tools).

- Predefined Prompts: These are structured pieces of information or instructions that can be provided to the AI model as context, pre-formatted for specific tasks or domains.

MCP Flow of Interaction:

- The AI Application (specifically, the LLM or AI Model) identifies a need for external information or functionality to complete a task.

- The MCP Client Implementation within the AI application formulates a request based on the MCP standard (e.g., “discover available functions,” “get data from a specific source,” “invoke a tool with these parameters”).

- This request is sent over a standardized communication protocol (like gRPC or REST) to the MCP Server Implementation of the relevant External System.

- The MCP Server Implementation processes the request. It might:

- Query Data Sources to retrieve specific data.

- Invoke Tools/Services to perform actions.

- Retrieve Predefined Prompts to provide specific context.

- The MCP Server Implementation then formats the response according to the MCP standard.

- The response is sent back to the MCP Client Implementation.

- The MCP Client Implementation provides the retrieved data, tool output, or contextual information to the LLM or AI Model, enabling it to continue its task with enhanced understanding and capability.

This architecture is designed to enable a standardized, discoverable, and efficient way for AI systems to interact with the vast ecosystem of digital information and services.

On the surface, the architecture and functionality appear to be sound and familiar to other client/server integration frameworks. Unfortunately, this is where the “goodness” ends. The most significant issue with MCP is security vulnerabilities. A quick search will easily produce more articles about the security shortcomings of MCP than you probably care to read. If you are not familiar with these issues, review the following articles:

- The Security Risks of Model Context Protocol

- The “S” in MCP: A Dark Tour of Model Context Protocol Security

- MCP Servers: The New Security Nightmare

In summary, MCP lacks the following:

- A standard authentication system

- Encrypted communication between components

- Integrity verification for tools or servers

- Complete transparency into the model’s instructions and tools

These four key issues should make you question the safety of MCP in your Enterprise AI solution. Now, we are down to the crux of the matter. Responsible engineers and engineering leadership can’t ignore these security issues. So, what is the path forward for Enterprise AI systems and MCP?

The right answer is to maintain a relentless focus on security and trustworthiness, regardless of the hype surrounding MCP. Much like the construction industry catch-phrase “Safety First”, Enterprise AI owners should adhere to “Trustworthiness and Security”. Without trust and security, your AI system is questionable and potentially harmful, which causes the company to incur significant risk. So all decisions concerning an Enterprise AI system should be focused around the concepts of “Trustworthiness and Security”. Given this approach, there are only two paths forward for integrating with Enterprise AI systems:

- Continue with custom integrations that do not conform to MCP

- Use MCP in controlled, scope-limited scenarios that mitigate the security issues

Remember, before the existence of MCP, integrations were all custom. Enterprise AI systems that require integration should continue with custom integrations, where they can fully control the scope, access control, and authentication mechanisms. If you are building new integration APIs, leveraging some of the MCP concepts is smart, allowing you to more easily adapt to MCP in the future when the security concerns are resolved.

If you are going to use MCP, use it in controlled and scope-limited scenarios. The following security precautions should be taken:

- Rigorously verify all inputs and outputs – trust nothing and verify everything

- Implement a tiered access control system where you can apply the principle of least privilege access.

- Use containers and application sandboxes to minimize access and proactively guard against unauthorized behaviors such as unintentionally transferring data to external systems.

- Destructive or highly sensitive operations should be properly restricted and possibly require human approval.

- Provide complete visibility and openness as to the operations that are available and being used by the AI system.

- Thoroughly inspect and test open-source servers and libraries that are incorporated into your AI system.

- Ensure any systems that access local files are not able to send those files to external services outside of the user access boundary of the given system.

- Although MCPs lack standard authentication protocols, this does not prevent companies from wrapping MCP with authentication protocols to ensure secure transmission of data; however, this always falls back to the support of the model and/or provider used.

To conclude, while MCP can make life easier, it also presents numerous cybersecurity issues. There are safe ways to deploy MCP, and there are unsafe ways to deploy MCP. It’s always best to identify the use case, determine the risk, and mitigate the security concerns up front, before a potential breach of sensitive information.