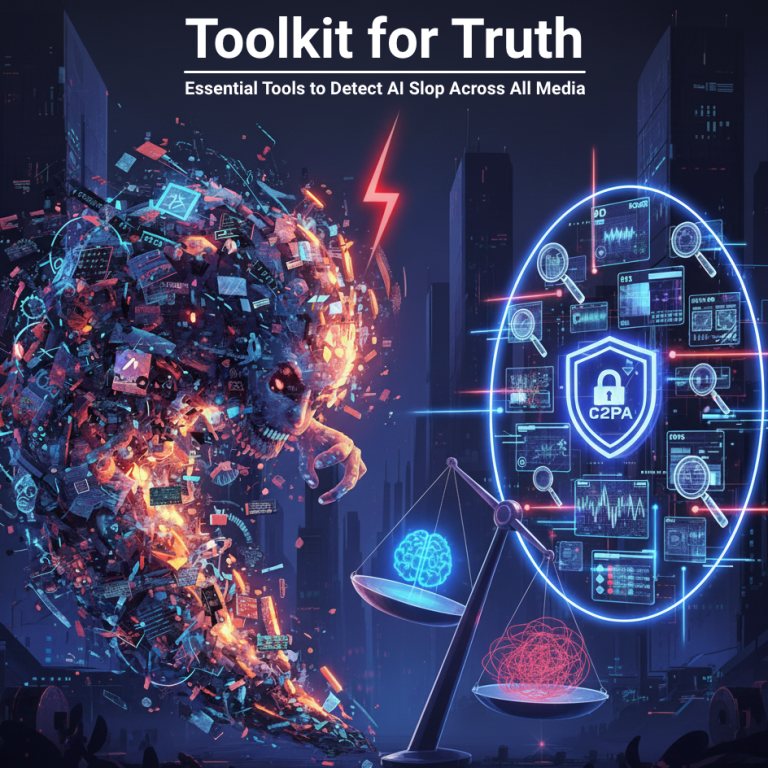

The Toolkit for Truth: Essential Tools to Detect AI Slop Across All Media

By: Chris Gaskins and Dominick Romano

I. Introduction: The Detection Arms Race

In the era of AI Slop—that overwhelming flood of low-quality, high-volume content—the ability to distinguish machines from humans is no longer a luxury, but a core digital skill. As we highlighted in our previous piece, “The Digital Deluge: Understanding, Spotting, and Surviving the Era of AI Slop”, the sheer quantity of AI-generated content is now drowning out genuine, high-effort work, eroding public trust, and polluting search results.

How do we move beyond simply spotting the telltale signs, like six-fingered hands or “buzzword salad,” and deploy technical solutions?

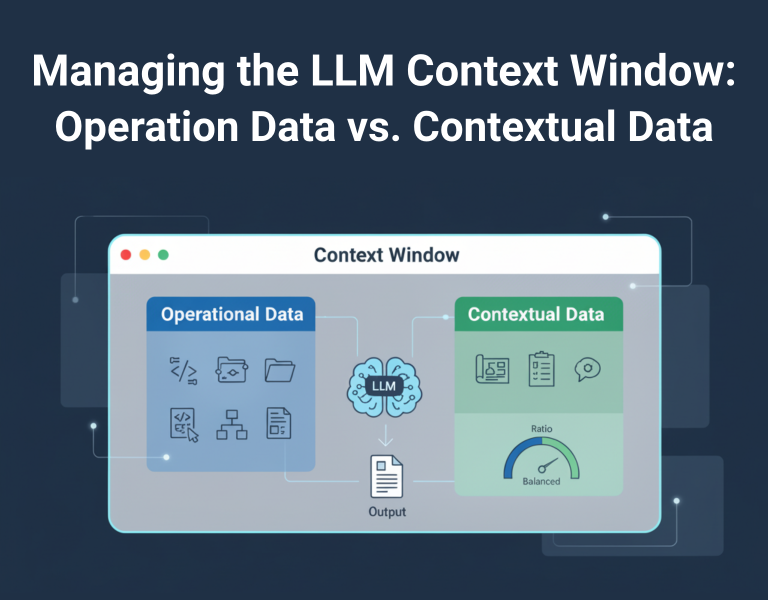

The race between AI generation and AI detection is a constant, evolving arms race. Because of this, effective defense requires understanding two core approaches to identifying synthetic media:

- Forensic Detection (Analysis): These tools analyze the output itself—the text, the image pixels, or the audio file—looking for statistical patterns, linguistic predictability, or subtle digital artifacts that betray a machine’s origin.

- Provenance (Watermarking): This is the gold standard for future trust. These tools don’t analyze the content after it’s created, but instead check for an embedded digital signature (a watermark or metadata) that was placed at the moment the content was first created.

The most robust strategy combines both. Below is a comprehensive guide to the essential tools, categorized by the type of content they detect, along with their key features and pricing models.

II. Forensic Detection Tools: Analyzing the Artifacts

Forensic tools analyze the final product for imperfections and statistical anomalies, specifically looking for telltale signs that indicate machine origination.

1. Text Content Detection (LLM Output)

These tools use natural language processing (NLP) to analyze statistical markers that differentiate machine language (which tends to be highly predictable and structured) from human language (which is more varied, or “bursty”).

| Tool | Focus/Best For | Pricing Model | Website URL |
| --- | --- | --- | --- |
| GPTZero | Education, Writers, General Use | Freemium | https://gptzero.me/ |
| Copyleaks | High-Accuracy, Multi-Language | Freemium | https://copyleaks.com/ |
| QuillBot AI Detector | Integrated Writing Suites | Freemium | https://quillbot.com/ai-content-detector |
| Originality.ai | SEO, Publishers, Content Agencies | Paid | https://originality.ai/ |
| Grammarly AI Detector | Integrated Writing Feedback | Freemium | https://www.grammarly.com/ |

Key Concept: The Perplexity/Burstiness Check

Text detectors measure perplexity (how surprised a language model is by each next word; predictable text scores low) and burstiness (the natural variation in sentence length and structure). AI output typically favors predictable wording and uniform structure, so low scores on both metrics suggest that the content is “too perfect” and likely machine-generated.
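To make the two metrics concrete, here is a minimal sketch in Python. It is not how any of the tools above work internally: commercial detectors rely on large neural language models, while this toy version assumes a simple add-one-smoothed unigram model built from a reference text. The function names and the choice of coefficient of variation for burstiness are illustrative assumptions.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length in words (0.0 = uniform)."""
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under a toy unigram model of `reference`.

    Assumption: add-one smoothing with one extra vocabulary slot for
    unseen words. Lower values mean the text is more predictable.
    """
    ref_counts = Counter(tokenize(reference))
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no word tokens")
    vocab_size = len(ref_counts) + 1
    total = sum(ref_counts.values())
    log_prob = sum(
        math.log((ref_counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    return math.exp(-log_prob / len(tokens))
```

Three four-word sentences in a row give a burstiness of 0.0, while a mix of one-word and long sentences scores well above that. Likewise, text made of words the reference model has seen often gets a lower perplexity than text full of unseen words.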

Limitations of Forensic Text Detection

While these tools provide valuable probability scores, they are not infallible and suffer from several key limitations:

- Lagging Technology: AI models are constantly and rapidly updated to sound more human, often outpacing the ability of detection tools to keep up. This means detectors are perpetually playing catch-up, leading to diminishing accuracy over time.

- False Positives: A serious drawback is the rate of false positives. Highly formulaic writing—such as dense academic papers, legal documents, or writing by non-native English speakers (ESL)—often exhibits the same structural consistency as AI, leading detectors to incorrectly flag authentic human work.

- Evasion Tools: The market now features “AI humanizer” tools designed specifically to rewrite AI-generated text to increase perplexity and burstiness, effectively shielding the content from detectors while requiring minimal human effort.

- Difficulty with Mixed Content: Most detectors struggle to accurately score documents where human writers have heavily edited, fact-checked, or integrated only small sections of AI-generated content into a larger human-written piece.

Beyond the Score: Interpreting Detector Results

Understanding these limitations is why we must treat a high detection score not as a verdict, but as a risk indicator. When a detector flags content, the following actions must be taken:

- Manual Fact-Check: Immediately verify every technical claim, statistic, or named source. AI Slop’s core sin is often factual hallucination.

- Quality Assessment: If the detector flags sections for high predictability and low variation, this is a strong indicator of low-effort “slop.” Treat this as a failure of quality and insight; the content is generic filler and should be rejected or heavily revised by a human expert.

- Confirm the Source: If the content is from an unknown external writer or a non-credentialed platform, treat the high score as a hard fail. If the content is from a trusted author, use the results to prompt a discussion about their workflow and need for self-editing.
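The three actions above can be read as a small decision procedure. The sketch below is one possible interpretation, not a prescribed workflow: the `Submission` fields, the `Action` labels, the order of the checks, and the 0.8 flag threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    STANDARD_REVIEW = "proceed with normal editorial review"
    REVISE = "fact-check failed: reject or revise heavily"
    DISCUSS = "discuss workflow and self-editing with the author"
    REJECT = "hard fail"


@dataclass(frozen=True)
class Submission:
    detector_score: float  # 0.0-1.0 likelihood reported by a detector (assumed scale)
    trusted_author: bool   # known, credentialed source?
    claims_verified: bool  # did the manual fact-check pass?


def triage(sub: Submission, flag_threshold: float = 0.8) -> Action:
    """Treat the detector score as a risk indicator, never as a verdict."""
    if not 0.0 <= sub.detector_score <= 1.0:
        raise ValueError("detector_score must be between 0.0 and 1.0")
    if sub.detector_score < flag_threshold:
        return Action.STANDARD_REVIEW   # not flagged
    if not sub.trusted_author:
        return Action.REJECT            # flagged + unknown source
    if not sub.claims_verified:
        return Action.REVISE            # flagged + failed fact-check
    return Action.DISCUSS               # flagged, but trusted and accurate
```

For example, `triage(Submission(0.95, trusted_author=False, claims_verified=True))` returns `Action.REJECT`, while the same score from a trusted author whose claims check out returns `Action.DISCUSS`.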

The fragility of forensic detection for text is precisely why the industry is moving rapidly toward Provenance tools as the superior long-term solution.

2. Multimedia Detection (Image, Video, and Audio)

Visual and auditory forensics examine pixel data, lighting consistency, and sound profiles to find AI-specific glitches and tendencies.

| Tool | Content Detected | Pricing Model | Website URL |
| --- | --- | --- | --- |
| AI or Not | Image, Video, Audio, Text | Freemium | https://www.aiornot.com/ |
| Hive Moderation | Image, Video, Audio, Text (API) | Paid | https://hivemoderation.com/ |
| Sightengine | Image, Video, Text | Freemium | https://sightengine.com/ |

Key Concept: Artifact Analysis

For visual media, detectors look for pixel-level inconsistencies—subtle distortions or patterns that appear when a diffusion model renders an image or video frame. For audio, they analyze the spectrogram (the visual representation of sound frequency over time) for telltale synthetic flatness or unnatural transitions that a human voice cannot replicate.
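For readers unfamiliar with spectrograms, the sketch below shows how one is computed from raw audio samples using a short-time Fourier transform. It is only the input representation, not a detector: how commercial tools score a spectrogram is not public, and the frame size, hop length, and Hann window used here are illustrative assumptions. A plain DFT is used to keep the example dependency-free; real pipelines would use an FFT library.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Return one list of magnitudes (bins 0..frame_size//2) per time frame."""
    # Hann window reduces leakage between neighbouring frequency bins.
    window = [
        0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
        for n in range(frame_size)
    ]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        chunk = [samples[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        for k in range(frame_size // 2 + 1):
            # Direct DFT of the windowed frame at frequency bin k.
            acc = sum(
                chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n in range(frame_size)
            )
            magnitudes.append(abs(acc))
        frames.append(magnitudes)
    return frames


# Usage: a pure 1000 Hz tone sampled at 8000 Hz.
RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * t / RATE) for t in range(256)]
frames = spectrogram(tone)
# Bin k corresponds to k * RATE / frame_size Hz, so 1000 Hz lands in bin 8.
peak_bins = [max(range(len(f)), key=f.__getitem__) for f in frames]
```

Each row of `frames` is one slice of time and each column one frequency band. A steady tone produces the same peak bin in every frame, whereas natural speech shifts energy between bins from frame to frame.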

Limitations of Multimedia Detection

While these tools are highly advanced, their limitations pose significant challenges, particularly as generative models like Midjourney V6+ and OpenAI’s Sora improve in realism:

- Evolving Realism: Newer AI models have drastically reduced the most obvious artifacts (like melted hands or inconsistent physics), making forensic detection harder with every generation.

- Simple Tampering: Even simple post-processing steps—such as applying a strong Instagram filter, aggressive cropping, or running the image through compression/lossy formats—can often confuse or completely defeat artifact-based detectors.

- Metadata Stripping: When multimedia files are uploaded to social media platforms (TikTok, X, Instagram), the platform automatically strips out most forensic metadata, removing key clues about the file’s origin before a consumer can check it.

- Proprietary Focus: Many commercial tools are highly tuned to detect output from specific major AI models. If a piece of slop is created using a new or obscure open-source model, the detection rate can drop sharply.

Beyond the Score: Interpreting Multimedia Results

When forensic tools return a high-risk score for an image, video, or audio file, the steps are slightly different, focusing heavily on human review and provenance:

- Immediate Human Review: A high score should immediately trigger mandatory review by a human moderator or editor. The score should guide the reviewer toward the subtle signs the detector may have picked up: unusual shadows, flickering pixels, or unnatural eye movement.

- Cross-Reference Manual Checks: Compare the forensic score with the manual red flags identified in our previous article (e.g., anatomical artifacts, garbled text, or uncanny valley texture). If both the human eye and the tool are suspicious, the trust level drops to zero.

- Mandatory Provenance Check: The most important step for multimedia is to immediately pivot to Provenance tools (Section III). Because forensic clues can be unreliable, the strongest available evidence of authenticity or AI origin is an embedded, cryptographically verifiable digital signature.

III. Provenance Tools: The Future of Trust

Provenance is simply the verifiable history and origin of a digital asset. Unlike forensic detection, which searches for clues after the fact, the provenance process proactively embeds a secure, digital signature into the content the moment it’s created.

Provenance Scope: Provenance is for all content, not just AI. This is a universal method for authenticating all digital assets, regardless of whether they were created by a human with a traditional camera or an AI model. AI is treated simply as one more tool whose use must be clearly recorded and disclosed in the content’s verifiable history.

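Why Provenance is Superior: It’s considered the gold standard because it’s proactive and tamper-evident. The digital signature is cryptographically bound to the file, making it extremely difficult to fake, although credentials stored as metadata can still be lost when a platform strips or re-encodes the file.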

How Provenance Works (The Three Steps)

The entire process is anchored by the Content Credentials standard defined by the Coalition for Content Provenance and Authenticity (C2PA).

- Creation (Signing): When content is created (whether by a camera, a recording device, or an AI generator), the generating software or device cryptographically signs the file. This signature includes assertions—verifiable data about who, when, and how the file was made.

- Editing (Logging): If the content is edited, the editing software (e.g., Adobe Express) logs the changes made and re-signs the file, creating a tamper-evident chain of custody. Any non-C2PA compliant edit (a tool that doesn’t re-sign the file) instantly breaks this chain.

- Verification (Checking): A third-party tool, like the C2PA Verify Tool, checks the digital signature. If the signature is valid, the recorded history has not been altered since signing and can be attributed to the signer; whether to believe that history still depends on trusting the signer. If the chain is broken, the content is flagged as tampered or suspicious.
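The signing, logging, and verification steps above can be illustrated with a toy chain of signed records. This is a sketch of the general idea only, not the C2PA format: real Content Credentials use certificate-backed asymmetric signatures stored in a manifest inside the file, whereas this example assumes a shared-secret HMAC from Python's standard library. The key, field names, and function names are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. C2PA signers use private keys tied to certificates.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(payload: dict) -> str:
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, body, hashlib.sha256).hexdigest()


def add_entry(history: list, content: bytes, action: str, tool: str) -> list:
    """Append a signed record bound to the content and to the previous record."""
    payload = {
        "action": action,
        "tool": tool,
        "content_hash": hashlib.sha256(content).hexdigest(),
        "prev_signature": history[-1]["signature"] if history else None,
    }
    return history + [{**payload, "signature": _sign(payload)}]


def verify(history: list, content: bytes) -> bool:
    """True only if every record is intact, each links to its predecessor,
    and the newest record matches the content being checked."""
    prev = None
    for entry in history:
        payload = {k: v for k, v in entry.items() if k != "signature"}
        if payload.get("prev_signature") != prev:
            return False  # chain of custody is broken
        if not hmac.compare_digest(_sign(payload), entry.get("signature", "")):
            return False  # record was altered after signing
        prev = entry["signature"]
    current_hash = hashlib.sha256(content).hexdigest()
    return bool(history) and history[-1]["content_hash"] == current_hash


# Usage: create, edit with a compliant tool, then verify.
original = b"raw pixels"
edited = b"raw pixels, cropped"
history = add_entry([], original, "created", "camera")
history = add_entry(history, edited, "cropped", "editor")
```

Here `verify(history, edited)` succeeds, while checking any bytes that were changed without adding a new signed record fails, as does a history in which an earlier record has been rewritten. A passing check says only that the history is intact, not that the signer's claims are true.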

| Tool/Standard | Content Verified | Pricing Model | Website URL |
| --- | --- | --- | --- |
| C2PA Verify Tool | Image, Video, Audio (Metadata) | Free Verification | https://verify.contentauthenticity.org/ |
| Google SynthID Detector | Image, Video, Audio, Text (Watermark) | Free Verification | https://deepmind.google/models/synthid/ |

The Industry Standard

While C2PA and SynthID are the most widely adopted solutions, the provenance landscape includes other efforts, such as Digimarc’s Validate platform (which uses durable watermarking for commercial authentication) and Meta Video Seal (an open-source watermarking effort focused on video integrity). It is the C2PA, however, that has solidified its position as the industry standard, with a steering committee that includes many of the largest platforms and technology companies (e.g., Google, Amazon, Meta, OpenAI, Adobe, Microsoft, and Sony). The full list of organizations committed to this effort can be viewed on the C2PA Membership page. This broad coalition is why C2PA is the most critical standard for long-term content integrity.

IV. Conclusion: The Hybrid Defense

No single tool is a silver bullet against evolving AI technology. The most effective defense for consumers, creators, and businesses is a hybrid strategy:

- Start with Manual Skepticism: Apply the five manual spotting techniques from the first article. If it looks like AI slop, don’t engage.

- Forensic Check (Text): Use LLM detection tools as a risk flag. Treat a high score not as a final judgment, but as a warning of high predictability and low value.

- Prioritize Provenance (Multimedia): When evaluating critical visual media, actively use the C2PA Verify Tool or SynthID Detector. If the content has valid Content Credentials, take the critical step of verifying the signer’s identity and the listed creation history. If content lacks this embedded history in a world where it is becoming standard, treat it with high skepticism.

- Verify the Source’s Credibility: If any technical step (forensic score or provenance signature) or manual quality check is questionable, your final step must be to verify the publisher’s or creator’s reputation. Ask: Is the content published by a reputable news organization or an established industry expert? Trust the content only if you trust the signer or the organization behind it.