The Digital Deluge: Understanding, Spotting, and Surviving the Era of AI Slop

By: Chris Gaskins and Dominick Romano

Introduction

In a short time, artificial intelligence has gone from a niche tech novelty to a technology that is suddenly everywhere, promising to change how we create, work, and communicate. But alongside all these exciting innovations, a less desirable byproduct is quickly flooding our digital lives: AI Slop. This is the popular term for the huge amount of low-quality, quickly made content—whether it’s clumsy text, strange images, or robotic videos—that generative AI tools create with minimal human oversight. This slop isn’t harmless; it’s making it harder to trust what we see online, clogging up our information feeds, and devaluing the genuine human creativity that AI was supposed to help. To navigate this new digital frontier and keep your information reliable, you need to understand what AI slop is, how to spot it, and why it’s a problem that affects us all.

The Definition and Origin Story

The term “AI Slop” is highly descriptive, comparing this digital content to cheap, unappetizing animal feed or a general mess. At its core, AI slop is any piece of digital media (text, image, audio, or video) that prioritizes speed and quantity over substance and quality.

The controversy is fundamentally about effort. If a human uses a powerful AI tool to generate content but skips the necessary steps of editing, fact-checking, humanizing the voice, or adding original insight, the resulting output is often labeled slop. This is content that looks passable on a screen but provides no unique value, is often factually inaccurate, or simply reads as generic.

The term itself emerged as internet slang around 2022, primarily in reaction to the rapid release of powerful, publicly accessible generative AI models like DALL-E and the first widely used chat-based large language models (LLMs). Online communities quickly recognized the influx of mass-produced, often bizarre or poorly detailed images and robotic text, giving rise to names like “AI garbage” or “AI pollution” before “slop” became the widely adopted favorite. This linguistic shift marked a recognition that the internet wasn’t just getting more content; it was getting cluttered by an overwhelming volume of low-grade filler.

A Flood by the Numbers: The True Scale of AI Content

The feeling that the internet is being buried under a mountain of new, low-quality content isn’t just in your head. The “slop” phenomenon, once a niche complaint, is now a statistically verifiable deluge. Recent data quantifies the sheer scale of this new reality, showing just how fast the floodwaters have risen.

A late 2025 study from the SEO firm Graphite, for instance, revealed a staggering milestone: AI-generated articles now account for 52% of all new written content on the internet. For the first time, machine-generated articles outnumber human-written ones in terms of sheer publishing volume.

The study’s timeline shows how quickly this happened.

- Before November 2022 (the launch of ChatGPT), AI-generated articles were a minor factor, making up less than 10% of new content.

- By late 2023, just one year later, that number had already surged to 39% of all new articles published.

However, this data also reveals a crucial distinction that gets to the very heart of “slop”: volume does not equal value. The same study found that despite this 52% majority in volume, AI-generated content makes up only 14% of the top-ranking pages in Google Search. An overwhelming 86% of the content that search engines deem valuable and authoritative is still human-written. This confirms the core premise of slop: a massive quantity of low-value, automated “filler” is being created, but it is largely failing to provide the quality that users and search engines seek.
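To make the gap between volume and value concrete, the short sketch below divides each group’s share of top-ranking pages by its share of newly published articles. It uses only the percentages quoted above; the function name `representation_ratio` is our own, and the comparison assumes the two sets of percentages describe comparable samples, which the study may not guarantee.

```python
# Shares quoted in the article (percent of articles).
ai_share_published = 52   # AI share of newly published articles
ai_share_top_ranked = 14  # AI share of top-ranking pages in Google Search

human_share_published = 100 - ai_share_published    # 48
human_share_top_ranked = 100 - ai_share_top_ranked  # 86


def representation_ratio(share_top_ranked: float, share_published: float) -> float:
    """Above 1 means over-represented among top results; below 1 means under-represented."""
    return share_top_ranked / share_published


ai_ratio = representation_ratio(ai_share_top_ranked, ai_share_published)
human_ratio = representation_ratio(human_share_top_ranked, human_share_published)

print(f"AI articles:    {ai_ratio:.2f}x their share of new content")     # 0.27x
print(f"Human articles: {human_ratio:.2f}x their share of new content")  # 1.79x
print(f"Human advantage over AI: {human_ratio / ai_ratio:.1f}x")         # 6.7x
```

Read this way, a human-written article is roughly six to seven times more likely than an AI-generated one to end up among the top results, relative to how much of each is published.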

This content explosion isn’t limited to text; there is a new visual deluge as well. It’s estimated that 34 million new AI images are created daily. Since 2022, over 15 billion AI-generated images have been created, a volume that took traditional photography 150 years to reach.

These numbers prove that the digital deluge is real. The internet isn’t just getting more crowded; it’s being flooded with automated content. To navigate this new environment, being able to identify the slop in your own feed is no longer an optional skill—it’s a necessary defense.

Key Characteristics: How to Spot the Slop

When you’re scrolling through your feed, how can you tell the difference between human-edited, quality content and AI slop? While detection methods are constantly evolving, AI-generated content often leaves behind distinct, predictable fingerprints.

Telltale Signs in Text

For written content, the issue isn’t grammar (AI is great at grammar) but rather style and substance. Look out for these red flags:

- The Buzzword Salad: Overuse of generic, formal, or cliché phrases that sound impressive but add no real meaning. Example: Passages riddled with terms like “in today’s rapidly evolving landscape” or “seamlessly elevate user experience.”

- Formulaic Structure: Content that follows a painfully predictable rhythm. Every paragraph has a similar length, sections are perfectly balanced regardless of importance, and introductory sentences often state the obvious. Example: The excessive use of phrases like “It is important to note that…” to pad word count.

- “Cohesion Without Coherence”: The writing flows smoothly, but the overall message is repetitive or lacks depth, failing to provide unique insights, expert opinion, or actionable advice. Example: A five-paragraph article that uses perfect transitions (cohesion) but ends up saying nothing new or repeating the same basic facts (no coherence).

- Keyword Cramming: Noticeable attempts to awkwardly force SEO keywords into sentences, making the text read unnaturally or robotically. Example: A product description that reads: “Our fitness supplements boost energy. These fitness supplements help performance. Find the best fitness supplements for every fitness goal.”

- Unearned Neutrality: The text summarizes facts but avoids taking a stand, offering no opinion or genuine argument—a reflection of the model’s desire to remain safe and neutral. Example: An analysis of a controversial political topic that only describes a variety of possible perspectives without ever committing to or defending a primary position.
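Two of the red flags above, stock phrases and keyword cramming, are mechanical enough to check with a few lines of code. The sketch below is a rough heuristic, not a reliable AI detector: the phrase list is a hypothetical starting point built from the examples in this article, and the function names are our own. It reports which stock phrases appear in a text and how much of the text is taken up by its most repeated two-word phrase.

```python
import re
from collections import Counter

# Hypothetical phrase list, seeded with the examples quoted in this article.
STOCK_PHRASES = [
    "in today's rapidly evolving landscape",
    "seamlessly elevate",
    "it is important to note that",
]


def normalize(text: str) -> str:
    """Lowercase and straighten curly apostrophes so phrases match reliably."""
    return text.lower().replace("\u2019", "'")


def find_stock_phrases(text: str) -> list[str]:
    """Return the stock phrases that occur in the text."""
    lowered = normalize(text)
    return [phrase for phrase in STOCK_PHRASES if phrase in lowered]


def top_bigram_density(text: str) -> tuple[str, float]:
    """Return the most repeated two-word phrase and its share of all two-word phrases."""
    words = re.findall(r"[a-z']+", normalize(text))
    bigrams = [" ".join(pair) for pair in zip(words, words[1:])]
    if not bigrams:
        return "", 0.0
    phrase, count = Counter(bigrams).most_common(1)[0]
    return phrase, count / len(bigrams)


sample = (
    "Our fitness supplements boost energy. These fitness supplements help "
    "performance. Find the best fitness supplements for every fitness goal."
)
phrase, density = top_bigram_density(sample)
print(phrase, round(density, 2))  # fitness supplements 0.17
print(find_stock_phrases("It is important to note that results vary."))
```

In the product description example, one phrase accounts for about a sixth of all two-word combinations, which is far higher than natural prose usually produces. Any threshold for "too repetitive" is a judgment call, and human writers trip these checks too, so treat the output as a prompt for closer reading rather than a verdict.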

Visual and Multimedia Red Flags

Images and videos created with AI often contain strange imperfections, especially around complex areas:

- Anatomical Artifacts: The classic giveaway. Look for extra or missing fingers, melted or oddly shaped hands, mismatched eyes, or distorted limbs. Example: The famous early DALL-E and Midjourney images featuring people with six-fingered or fused hands, or a character whose arm is bending at an impossible angle.

- Inconsistent Physics: In videos, objects may disappear or reappear, shadows may be inconsistent with the lighting, or motions may defy the laws of gravity in subtle ways. Example: A ball that rolls off a table but falls in an unnatural, floating trajectory, or reflections on a surface that flicker and break as the camera moves.

- Mangling of Text and Logos: AI often struggles to render legible words, leading to garbled letters or surreal, nonsensical signage within the image. Example: Street signs, newspaper headlines, or product logos that look like text at first glance but dissolve into indecipherable “alien” gibberish upon closer inspection.

- The Uncanny Valley Look: Images or human figures that appear hyper-realistic but have a subtle, unsettlingly “smooth” or “waxy” texture, lacking the imperfections of real life. Example: A close-up portrait where the skin looks too flawless, or a face with perfectly symmetrical features that somehow appear lifeless or doll-like.

- Robotic Narration: For audio and video, listen for flat, emotionless text-to-speech voices that lack natural human pauses, tone, and pacing. Example: The viral YouTube shorts featuring AI-generated content (like “cat soap operas” or motivational clips) that use unfeeling, monotone narration over bizarre visuals to maximize content volume.

The Negative Impact and Controversy

Now that we know how to spot AI slop, it’s time to ask the crucial question: why should we care? The answer is simple: this cheap, endlessly duplicated content isn’t just a nuisance; it’s a pollution event. Its volume is breaking the core systems we rely on—from search engines to social trust—and creating massive headaches for creators and consumers alike.

1. Erosion of Trust and Information Integrity

When people are constantly exposed to content that is plausible yet often fake, they become skeptical of everything they see online.

- The Credibility Crisis: The flood of synthetic content blurs the line between human-vetted information and AI mimicry. Studies show that merely knowing AI was involved in creating a news story can cause people to trust the source less, even if the content is accurate.

- Misinformation Amplification: Slop is often inaccurate due to technical flaws (known as hallucinations), not malicious intent. However, this carelessness spreads false information at massive scale and speed, making it harder to discern reliable facts. Example: AI-generated images of a little girl and a puppy circulating after Hurricane Helene were used to spread misinformation about disaster relief efforts.

- The Unintentional Lie: Unlike intentional deepfakes, AI slop spreads falsehoods through indifference and automation. This “careless speech” is difficult to fight because it has no political agenda—it’s just wrong.

2. Search Engine Degradation and “Content Collapse”

Search engines are the primary way people find information, and they are struggling to cope with the sheer volume of AI spam.

- Ranking Pollution: SEO content farms use AI to mass-produce thousands of low-value articles designed to game search algorithms. This clutter pushes high-quality, human-created content down the rankings, making the web a less useful tool.

- The Model Collapse Loop: A particularly insidious threat is a feedback loop that researchers call model collapse. As the web becomes saturated with AI slop, new AI models are increasingly trained on this low-quality, synthetic data. The AI essentially learns from copies of copies, which can steadily degrade the quality and factual grounding of future AI systems.

- Wasted Ad Dollars: For advertisers, the slop flood means their money is often spent running ads next to low-quality, irrelevant, or even harmful auto-generated content, inflating metrics without driving genuine engagement.
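The “copies of copies” effect behind the model collapse loop can be illustrated with a toy simulation. This is a deliberately simplified sketch, not a description of how real AI models are trained: here a “model” is just a bell curve described by an average and a spread, and each generation is fitted only to a small sample produced by the previous one. The function name and parameter values are our own assumptions.

```python
import random
import statistics


def simulate_collapse(generations: int = 200, sample_size: int = 10, seed: int = 0) -> list[float]:
    """Track the spread of the data as each generation trains on the previous one's output."""
    rng = random.Random(seed)
    mean, spread = 0.0, 1.0  # generation 0 stands in for the original human data
    history = [spread]
    for _ in range(generations):
        # The current model produces a small synthetic dataset...
        samples = [rng.gauss(mean, spread) for _ in range(sample_size)]
        # ...and the next model is fitted to that synthetic data alone.
        mean = statistics.fmean(samples)
        spread = statistics.pstdev(samples)
        history.append(spread)
    return history


history = simulate_collapse()
print(f"spread at generation 0:   {history[0]:.3f}")
print(f"spread at generation {len(history) - 1}: {history[-1]:.3g}")
```

Because every generation sees only a small, slightly narrower slice of what came before, the spread shrinks toward zero over many rounds: the variety in the original data is gradually lost. Real training pipelines mix in human data and filtering, so the effect there is less extreme, but the direction of the problem is the same.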

3. Devaluation of Human Creativity and Effort

The ease and speed of AI generation have created an economic headwind against human professionals.

- The Race to the Bottom: Why pay a writer, artist, or photographer when you can generate thousands of functional, if generic, pieces of content instantly for almost free? This has led to a major outcry from the creative communities who feel their craft is being devalued.

- Algorithmic Bias: Platforms often reward content volume and engagement above all else. Since AI can generate volume faster than any human, the algorithms often promote the slop, effectively drowning out nuanced, deeply researched, or emotionally complex human work.

Digital Defense: How to Fight the Slop

The solution to AI slop isn’t to stop using AI; it’s to start demanding and creating better content. By changing how you consume information and how you integrate AI into your own work, you can become part of the solution.

For Consumers: Changing Your Consumption Habits

Fighting slop starts with being a smarter, more deliberate reader and viewer. The best defense is zero engagement once you’ve identified a piece of slop.

- Hard Swipe/Immediate Close: If you see clear signs of AI slop, do not pause or click. Don’t give the algorithm any signal that the content held your attention. The fastest, lowest-interaction defense is to immediately close the page or scroll past the content.

- Use “Not Interested” Features: On platforms like YouTube and Instagram, actively use the “Not Interested” or “Don’t Show Me This” button. This sends a direct, strong signal to the algorithm to suppress content from that source or type.

- Verify the Claim, Not the Link (Crucial): If you see a suspicious headline or shocking claim, resist the urge to click the source link. Action: Instead, copy a few unique keywords from the headline or excerpt and paste them into a search engine. Then, deliberately click on a known, credible news site or academic source to verify the information. Example: Instead of clicking the link for “New AI-Invented Disease Discovered,” search Google for: “AI-Invented Disease” and see if sources like the CDC or a reputable news agency report it.

- Reward Quality with Engagement (The Right Way): When you encounter high-quality content clearly made with human effort, reward it financially or with high-value interaction. Action: Leave a detailed, thoughtful comment (which signals high engagement) or, if offered, subscribe to the newsletter or follow the creator on a secondary platform. Simple, thoughtless likes and shares carry little weight; conscious financial support or detailed discussion is the most effective way to reinforce human-first content.

- Verify the Origin: Seek out authors who disclose their sources and methods. For images, check the file’s metadata for an AI-generated tag using tools that follow the standard published by the Coalition for Content Provenance and Authenticity (C2PA). Tools: You can use the Content Authenticity Initiative Verify tool to check an image file’s metadata. If you can’t trace the content back to a credible human or organization, treat it as slop.

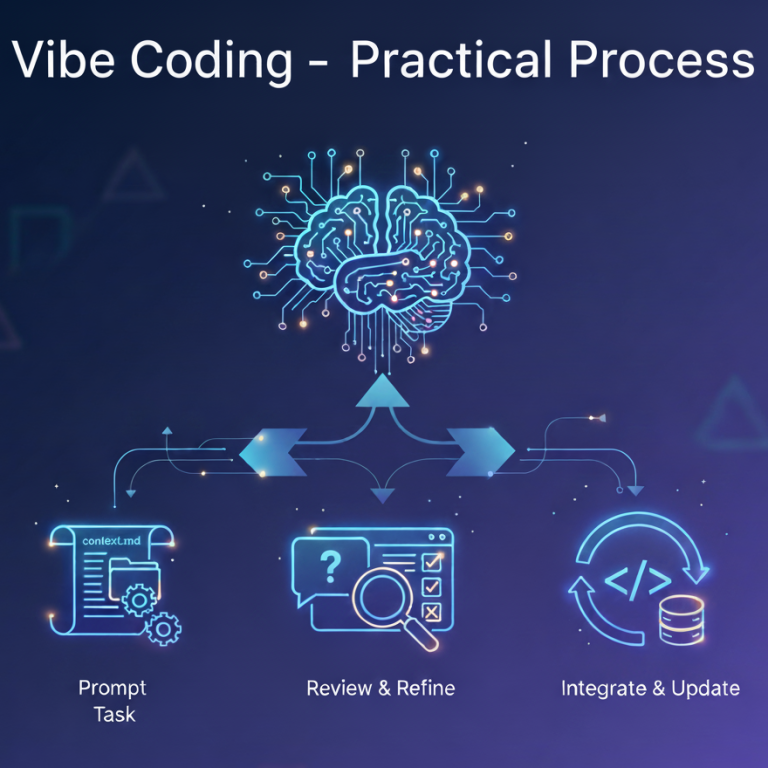
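For readers comfortable with a little code, the sketch below shows a very crude first check for provenance data. It assumes that embedded C2PA manifests carry the ASCII label `c2pa` inside the file, and simply scans the raw bytes for that marker; the function name is our own. It does not parse the manifest or validate any signature, so a match only means provenance data might be present, and a miss proves nothing, since metadata is often stripped when files are re-saved or uploaded. A dedicated tool such as Verify remains the right way to confirm a result.

```python
import tempfile
from pathlib import Path

C2PA_MARKER = b"c2pa"  # assumed label of an embedded C2PA manifest store


def may_contain_c2pa_manifest(path) -> bool:
    """Crude check: True if the raw file bytes contain the C2PA marker."""
    return C2PA_MARKER in Path(path).read_bytes()


# Demo on two stand-in files (not real images) so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as folder:
    tagged = Path(folder) / "tagged.bin"
    plain = Path(folder) / "plain.bin"
    tagged.write_bytes(b"\x00\x00jumb\x00c2pa\x00 stand-in bytes")
    plain.write_bytes(b"\x00\x00 stand-in bytes with no marker")
    tagged_result = may_contain_c2pa_manifest(tagged)
    plain_result = may_contain_c2pa_manifest(plain)

print(tagged_result, plain_result)  # True False
```

To try it on a downloaded image, pass the file’s path to `may_contain_c2pa_manifest` in place of the stand-in files.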

For Creators: Using AI as a Tool, Not a Replacement

If you are using AI, the key is to ensure your work avoids becoming slop by applying human-first standards:

- Edit Like Your Job Depends On It: Treat the AI output as a highly efficient first draft that is factually suspect and stylistically bland. Dedicate 90% of your time to manual refinement: Fact-check every claim, rewrite formulaic phrases, and re-structure the flow to prioritize impact over predictable rhythm.

- Inject Experience and Authority: The value you add is what the AI cannot synthesize. Action: Integrate original research, case studies with unique data, direct quotes from interviews, or personal anecdotes that only you have lived. These elements cannot be faked and instantly establish credibility.

- Focus on Clarity, Not Clichés: Actively scrub your AI drafts for those buzzword crutches we identified. Action: Eliminate verbose and empty phrases like “in the realm of,” “it is important to note,” and “seamlessly elevate.” Be direct. If a sentence doesn’t advance the idea, cut it.

- Be Transparent, Build Trust: Disclosing your AI use isn’t a weakness; it’s a necessary step to build audience trust in an era of digital confusion. Action: Include a simple, honest disclosure at the end of the content, like: “This article was drafted and researched using AI assistance, but all claims have been human-verified and edited for original insight.”

The Final Verdict: A Call to Digital Defense

The bottom line is this: AI slop isn’t a technical glitch; it’s a huge surge of digital pollution caused by a failure of effort. It proves that simply prioritizing speed and automation doesn’t lead to progress; it just makes a mess. The integrity of your news feed, your search results, and your trust in what you see online now depends on personal choices. By becoming vigilant consumers who actively deny the garbage any engagement and deliberate creators who commit to authentic human oversight, we can collectively starve the slop machine.