Managing the LLM Context Window: Operational Data vs. Contextual Data

By: Dominick Romano

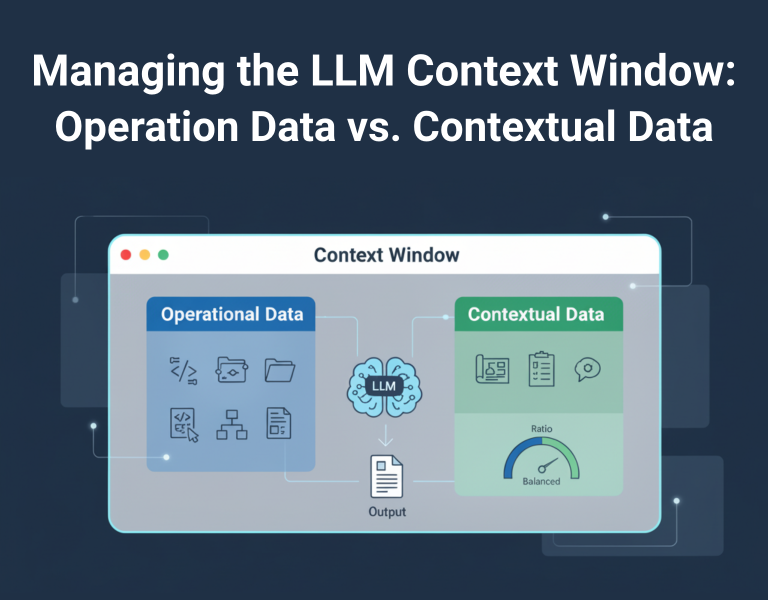

In the world of large language models (LLMs), the context window represents the amount of information a model can process at once during a single interaction. It acts as the model’s short-term memory, holding all the input data provided in a prompt, including previous messages in a conversation. Context engineering is the practice of strategically designing and managing this input to optimize the model’s output. This involves curating what goes into the context window to ensure relevance, efficiency, and accuracy.

Effective context engineering helps models generate more precise responses by balancing the volume and quality of information. Too little context can lead to vague or incorrect outputs, while too much can overwhelm the model, causing it to lose focus or exceed token limits. In this post, we explore the types of context, the importance of seeding the context window properly, and a practical workflow for tasks like generating HTML files. We also discuss the ratio of background context to operational context needed to keep outputs stable.

Types of Context in the Window

Context in an LLM’s window can be categorized into several types, each serving a distinct purpose in guiding the model’s behavior. Understanding these helps in structuring prompts for better results.

- Operational Context: This includes the core data or files that the model needs to act upon directly. For example, in code generation tasks, this might be an existing HTML file that requires modifications. Operational context is actionable and specific, forming the “what” of the task.

- Background Context: This encompasses the guidelines, specifications, and reference material that inform how the model should process the operational data. It includes design documents, rules, or domain knowledge that provide the “how” and “why.”

- Instructional Context: Direct commands or prompts that tell the model what to do, often building on the other types. This is where follow-up prompts leverage the seeded context to produce outputs.

- Historical Context: Previous interactions in a conversation that build continuity, ensuring the model remembers earlier decisions or outputs.

These types must be managed carefully to avoid dilution of key information within the finite context window.
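One way to keep the four types separate is to tag each piece of input before it goes into the prompt. The sketch below is illustrative only: the names `ContextKind`, `ContextSegment`, and `assemble_prompt` are hypothetical, and the ordering (background first, instruction last) is an assumption rather than a rule from any particular model vendor.

```python
from dataclasses import dataclass
from enum import Enum


class ContextKind(Enum):
    OPERATIONAL = "operational"      # the file or data being acted on
    BACKGROUND = "background"        # specs, rules, domain knowledge
    INSTRUCTIONAL = "instructional"  # the direct command
    HISTORICAL = "historical"        # earlier turns in the conversation


@dataclass
class ContextSegment:
    kind: ContextKind
    text: str


# Assumed ordering: background, then history, then the file, then the command.
ORDER = [
    ContextKind.BACKGROUND,
    ContextKind.HISTORICAL,
    ContextKind.OPERATIONAL,
    ContextKind.INSTRUCTIONAL,
]


def assemble_prompt(segments):
    """Group segments by kind and join them into one labeled prompt string."""
    parts = []
    for kind in ORDER:
        for segment in segments:
            if segment.kind is kind:
                parts.append(f"[{kind.value}]\n{segment.text}")
    return "\n\n".join(parts)


# Segments can be collected in any order; assemble_prompt sorts them by kind.
segments = [
    ContextSegment(ContextKind.INSTRUCTIONAL, "Add a subtitle under the hero heading."),
    ContextSegment(ContextKind.OPERATIONAL, "<div id='hero'>Welcome</div>"),
    ContextSegment(ContextKind.BACKGROUND, "Keep all element IDs stable."),
]
prompt = assemble_prompt(segments)
```

Labeling each segment also makes it easier to see at a glance which type is crowding out the others.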

Seeding the Context Window: Substance and Amount

Seeding the context window through context engineering means intentionally populating it with the right mix of information before issuing a final instruction. This ensures the model has sufficient grounding to produce reliable outputs without hallucinating or deviating.

The substance refers to the quality and relevance of the context: specific, domain-relevant details that match the task’s goals. The amount is about balance: providing enough to cover edge cases without overloading the window. Proper seeding reduces errors, improves consistency, and enhances efficiency by minimizing the need for multiple iterations.

For instance, in a multi-step workflow, initial prompts can generate or refine contextual data, which then informs subsequent operational prompts. This layered approach leverages the context window’s persistence in conversations, allowing follow-up prompts to reference earlier seeded information implicitly.
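The layered approach can be sketched as a growing list of messages. This is a minimal illustration that assumes a generic role/content message format; `build_conversation` is a hypothetical helper, and in a real session the model's replies would be interleaved between these user turns.

```python
def build_conversation(spec_text, refinements, final_instruction):
    """Return the user-side turns: seed the spec, refine it, then instruct."""
    messages = [
        {"role": "user", "content": "Design and technical specifications:\n" + spec_text}
    ]
    # Each refinement adds to the seeded context instead of replacing it.
    for note in refinements:
        messages.append({"role": "user", "content": "Refinement: " + note})
    # The operational prompt comes last and relies on everything above it.
    messages.append({"role": "user", "content": final_instruction})
    return messages


conversation = build_conversation(
    "Layout: two columns. Fonts: system sans-serif.",
    ["Use a dark header."],
    "Build the HTML file according to the specifications above.",
)
```

Because the final instruction is short, nearly all of the window is occupied by the seeded specification and its refinements.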

Workflow for Generating an HTML File

A practical application of context management is in generating HTML files. This workflow uses context engineering to build a robust foundation, ensuring the final output is secure, consistent, and aligned with specifications.

- Generate or Import the Design and Technical Specifications: Start by creating a comprehensive document from scratch or basing it on an existing file for modification or style transfer. This document should outline visual design (e.g., layout, colors, fonts) and technical details (e.g., structure, responsiveness). Always include a cybersecurity section covering aspects like input validation, HTTPS enforcement, and protection against common vulnerabilities such as XSS or CSRF. Additionally, provide a software bill of materials listing all libraries used along with their versions (e.g., Bootstrap 5.3.0, jQuery 3.6.0). Define authorized access boundaries, such as role-based access controls or API authentication requirements.

- Refine the Technical Specifications: Use follow-up prompts to refine or update the specs based on user feedback. This step iterates on the seeded context, ensuring it evolves without losing prior details.

- Prompt Model to Build the HTML File: Finally, prompt the model to generate the HTML code, referencing the established specifications. This leverages the entire context window to produce a complete, compliant result.

This workflow demonstrates how seeding builds a stable base, allowing each step to build on the previous one.
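The specification document from the first step can be kept as structured data and checked for completeness before it is seeded. The sketch below is one possible shape, not a standard: the section keys, `missing_sections`, and `render_spec` are hypothetical names, and the sample entries other than the two library versions are placeholders.

```python
# Section keys mapped to the headings used in the rendered document.
REQUIRED_SECTIONS = {
    "visual_design": "Visual Design",
    "technical_details": "Technical Details",
    "security": "Cybersecurity",
    "sbom": "Software Bill of Materials",
    "access_boundaries": "Authorized Access Boundaries",
}


def missing_sections(spec):
    """List the required section keys that are absent or empty."""
    return [key for key in REQUIRED_SECTIONS if not spec.get(key)]


def render_spec(spec):
    """Render the spec as plain text, refusing to render an incomplete one."""
    missing = missing_sections(spec)
    if missing:
        raise ValueError("spec is missing sections: " + ", ".join(missing))
    lines = []
    for key, heading in REQUIRED_SECTIONS.items():
        lines.append(heading + ":")
        lines.extend("- " + item for item in spec[key])
    return "\n".join(lines)


spec = {
    "visual_design": ["Two-column layout", "System sans-serif fonts"],
    "technical_details": ["Semantic HTML5 structure", "Responsive breakpoints"],
    "security": ["Validate all form input", "Enforce HTTPS"],
    "sbom": ["Bootstrap 5.3.0", "jQuery 3.6.0"],
    "access_boundaries": ["Admin pages require role-based access"],
}
spec_text = render_spec(spec)
```

Failing early on a missing section is a deliberate choice here: it keeps an incomplete spec from being seeded and silently leaving gaps for the model to fill.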

The Importance of Background to Operational Context Ratio

Achieving a stable outcome requires a balanced ratio of background context (e.g., design and technical specifications) to operational context (e.g., the file being modified). A “good enough” ratio typically means providing more background context than raw operational data, often in a 2:1 or higher proportion depending on complexity. This gives the context window a high “specification rank” (equally, a high topic, domain, or subject rank), meaning the seeded specifics dominate the model’s focus.
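A rough check of this ratio can be done before sending a prompt. The sketch below counts whitespace-separated words as a stand-in for tokens, which is an assumption; a real tokenizer would give different absolute numbers. The function names and the default target of 2.0 (taken from the 2:1 figure above) are illustrative.

```python
def context_ratio(background_text, operational_text):
    """Return background words divided by operational words."""
    background = len(background_text.split())
    operational = len(operational_text.split())
    if operational == 0:
        raise ValueError("operational context is empty")
    return background / operational


def meets_target(background_text, operational_text, target=2.0):
    """True when the background-to-operational ratio reaches the target."""
    return context_ratio(background_text, operational_text) >= target


background = "Keep element IDs stable and use Bootstrap 5.3.0 only"
operational = "<div id='hero'>Welcome</div> <p id='intro'>Hello</p>"
ratio = context_ratio(background, operational)
```

Here the background text has 9 words and the operational text has 4, so the ratio is 2.25 and the 2:1 target is met.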

Why is this ratio crucial? Insufficient background information can lead to unstable outputs, where the model fills gaps with assumptions or randomness. For example, without clear specs on DOM structure, the model might arbitrarily alter element IDs or classes, breaking existing styles or scripts. Similarly, it could switch frameworks or libraries unpredictably, introducing incompatibilities or security risks.
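The ID-drift problem described above can be detected mechanically after generation. This is a minimal sketch using Python's standard `html.parser` module; `IdCollector`, `collect_ids`, and `dropped_ids` are hypothetical helper names, and the check covers only `id` attributes, not classes or scripts.

```python
from html.parser import HTMLParser


class IdCollector(HTMLParser):
    """Collect the value of every id attribute seen in the markup."""

    def __init__(self):
        super().__init__()
        self.ids = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "id" and value:
                self.ids.add(value)


def collect_ids(html):
    collector = IdCollector()
    collector.feed(html)
    collector.close()
    return collector.ids


def dropped_ids(original_html, generated_html):
    """Return IDs present in the original but missing from the output."""
    return sorted(collect_ids(original_html) - collect_ids(generated_html))


original = '<div id="hero"><p id="intro">Hi</p></div>'
generated = '<section id="hero"><p id="lead">Hi</p></section>'
lost = dropped_ids(original, generated)
```

In this example `lost` contains `intro`, because the generated markup renamed that element's ID to `lead`.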

By seeding the prompt with detailed specs, you anchor the model to predefined rules, reducing variability. This is especially important in tasks like HTML generation, where consistency in IDs, classes, and dependencies ensures maintainability and security. A high specification rank reduces hallucinations, because the model is more likely to follow the provided context than to fall back on patterns from its training data.

Consider these benefits:

- Stability: Prevents random changes by enforcing specs.

- Accuracy: Aligns output with intended design and security standards.

- Efficiency: Reduces iterations needed to correct deviations.

The following table outlines background context to operational context volume ratios with resulting outcomes and issues.

Closing

In summary, managing the context window through thoughtful engineering transforms LLMs from unpredictable tools into reliable assistants. By categorizing context types, seeding appropriately, and maintaining a suitable ratio of background context to operational context, you can achieve precise, stable results in workflows like HTML generation. This approach not only enhances output quality but also builds trust in AI-driven processes.